The “Audio Myth” of 24-bits vs. 16-bits

I’ve had some back-and-forth with a few readers following my negative assessment of an “educational” piece posted on a high-res audio site, written by an esteemed member of the audiophile press. You can read the piece and the comments on that site. In its simplest form, the issue is whether adding 8 additional bits to each of the sample values in a PCM system increases the “resolution” of the system by providing more discrete values over the same total audio signal OR do the additional discrete values provide greater dynamic range by extending the range of the system.

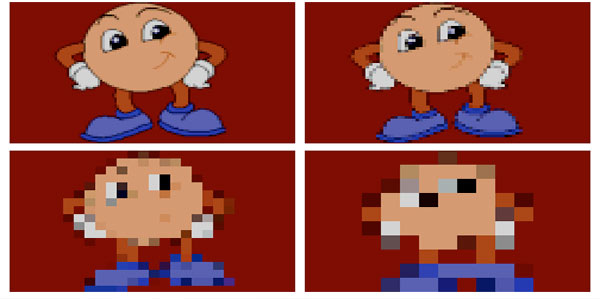

I’ve read the case for the former position before. I try to point out that those who claim more “volume levels” are like more pixels making an image more realistic are making the wrong analogy. Digital audio doesn’t work like that. But I’ve been unable to make the facts clear through my posts and follow-up comments.

So I went to my digital expert…I sent a comment or two off to John Siau, the chief designer and principal at Benchmark Media. This is the guy who has mastered electrical engineering (analog and digital) and the art AND science of designing what I believe is some of the finest audio equipment on the planet…regardless of cost (and no, the ads on my site are not paid!). The DAC2 HGC is among the finest digital-to-analog converters I’ve ever heard, and I’m looking forward to auditioning the new AHB2 power amplifier.

After a day of waiting, John (who is a very busy guy) wrote me back with a detailed explanation of the issue. He even made the subject into an article, which he posted to the Benchmark site, where you can read the entire piece. Here’s the concluding paragraph of his piece:

“24-bit word lengths provide a very efficient method of improving the noise performance of digital systems. When dither is properly applied, there is no advantage to long word lengths other than noise. All quantization errors in a properly dithered digital system produce a random noise signal. Properly dithered digital systems have infinite amplitude resolution. Long word lengths do not improve the “resolution” of digital systems, they only improve the noise performance.”
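To make the “random noise” claim concrete, here is a minimal Python sketch. It is my own illustration, not code from John’s article, and it assumes an ideal rounding quantizer with a step of 1 LSB, a 1 kHz sine sampled at 48 kHz with a peak of only 0.4 LSB, and triangular-PDF (TPDF) dither spanning ±1 LSB; the function names are hypothetical. Without dither, every sample rounds to zero, so the error is simply the inverted signal (a correlation of −1 with the input). With dither, the error no longer tracks the signal at all, which is what “behaves like noise” means here.

```python
import math
import random

FS = 48_000   # sample rate in Hz (assumed for illustration)
TONE = 1_000  # test-tone frequency in Hz (assumed)
AMP = 0.4     # sine peak in LSBs: smaller than one quantization step

def quantize(x: float) -> int:
    """Ideal quantizer: round to the nearest integer code (1 LSB = 1.0)."""
    return round(x)

def tpdf_dither(rng: random.Random) -> float:
    """Triangular-PDF dither spanning +/-1 LSB (sum of two uniform +/-0.5 LSB draws)."""
    return rng.uniform(-0.5, 0.5) + rng.uniform(-0.5, 0.5)

def error_signal_correlation(dither: bool, n: int = FS, seed: int = 1) -> float:
    """Pearson correlation between the input sine and the total quantization error."""
    rng = random.Random(seed)
    sig, err = [], []
    for i in range(n):
        x = AMP * math.sin(2 * math.pi * TONE * i / FS)
        y = quantize(x + tpdf_dither(rng)) if dither else quantize(x)
        sig.append(x)
        err.append(y - x)  # total error = output code minus true input
    ms, me = sum(sig) / n, sum(err) / n
    cov = sum((s - ms) * (e - me) for s, e in zip(sig, err))
    var_s = sum((s - ms) ** 2 for s in sig)
    var_e = sum((e - me) ** 2 for e in err)
    return cov / math.sqrt(var_s * var_e)

if __name__ == "__main__":
    print(f"no dither:   {error_signal_correlation(dither=False):+.3f}")
    print(f"TPDF dither: {error_signal_correlation(dither=True):+.3f}")
```

The correlation check only shows that the error stops following the signal. TPDF dither is usually preferred because it also keeps the error power independent of the signal, at the price of a somewhat higher noise floor (about 1/4 LSB² of total error power, versus roughly 1/12 LSB² for undithered rounding of a busy signal).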

The article is an excellent and very detailed survey of digital encoding and the impact of increasing word lengths and sample rates. I was particularly impressed with the very thoughtful way he opened the piece with an analysis of bit length in a digitizing system. He states:

“Every added bit decreases the system noise by about 6 dB when measured over the entire Nyquist bandwidth of the digital system. The Nyquist bandwidth is equal to 1/2 of the sample rate and it defines the absolute limits of the frequencies that can be conveyed by a digital system. We can improve the audio-band SNR by increasing the word length, by increasing the sample rate, or by increasing both. In all cases, a properly dithered digital system has infinite amplitude resolution (just like any analog system). When properly dithered, the errors produced by digital quantization produce nothing other than white noise! This surprising fact is poorly understood by many.”

This is basically what I’ve been teaching my students for many years…but I’ve been doing it in reverse. When John says “every added bit decreases the system noise by about 6 dB”, he’s saying the same thing as “for every bit, we gain about 6 dB of signal-to-noise ratio in the system”.
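The “about 6 dB per bit” rule is just 20·log10(2) ≈ 6.02 dB. Here is a minimal Python sketch that compares the textbook formula with a direct measurement over the whole Nyquist band. It assumes an ideal rounding quantizer and a full-scale sine, which is where the extra 1.76 dB in the usual 6.02N + 1.76 dB formula comes from; the function names and the 997 Hz / 48 kHz test signal are my own choices for illustration.

```python
import math

def quantization_snr_db(bits: int) -> float:
    """Textbook SNR of an ideal N-bit quantizer for a full-scale sine,
    over the full Nyquist band: 10*log10(1.5 * 4**N) = 6.02*N + 1.76 dB."""
    return 20 * math.log10(2) * bits + 10 * math.log10(1.5)

def measured_snr_db(bits: int, fs: int = 48_000, tone: int = 997) -> float:
    """Quantize one second of a near-full-scale sine (no dither) and compare
    signal power with quantization-error power; 1 LSB = 1.0."""
    amp = 2 ** (bits - 1) - 1  # largest peak that fits without clipping
    sig_pow = err_pow = 0.0
    for i in range(fs):
        x = amp * math.sin(2 * math.pi * tone * i / fs)
        e = round(x) - x  # quantization error of this sample
        sig_pow += x * x
        err_pow += e * e
    return 10 * math.log10(sig_pow / err_pow)

if __name__ == "__main__":
    for n in (16, 24):
        print(f"{n}-bit: formula {quantization_snr_db(n):.2f} dB, "
              f"measured {measured_snr_db(n):.2f} dB")
```

Dropping the 1.76 dB term gives the plain 20·log10(2^N) figures (about 96.3 dB for 16 bits and 144.5 dB for 24 bits) that are often quoted.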

It’s the end result that matters…it’s not really important in understanding the benefits of higher sample rates or longer words in technical terms. The advantages of a high-resolution PCM-based audio recording over analog technologies and the DSD format are a lower noise floor, more accurate reproduction of an extended frequency response, lower distortion, and ease of use.

I encourage everyone to read John’s excellent article. He’s an expert and writes without the excessive marketing spin that populates so many authoritative “white papers”.

I hope this puts this issue to rest. Although, when I get a moment back in LA, I may create some illustrations to accompany his article.

Another thoughtful article. Speaking of myths and such, and if you haven’t thought of it already, I suggest an extended interview with JJ Johnston (ex-Bell Labs, modern codec guru, all-around no nonsense devotee to the scientific method). He recently got sucked into the avsforum “debate” that is somewhat related to the test files you created with Scott, and I am perpetually impressed with his depth of knowledge, common sense and professionalism. If you ever want to conduct a formal test of HR audio, getting to know JJ and getting his insights on how to set it up and interpret results would be invaluable. I’d guess you’d get along well.

Another ex-Bell Labs guy, Alan Bell, is a friend…although it’s been many years. Thanks for the recommendation…I’ll see if I can contact him.

I agree with what this post says, but it is kind of counterintuitive, even though I studied signal theory.

Perhaps the noise level difference is not so big between 16 and 24 bits, but to take a more obvious example, the jump from 8-bit to 16-bit in the sound cards of early PCs is so obvious in terms of sound quality that it doesn’t seem satisfactory to say that bit depth ‘only’ has an impact on noise level. It *does* impact sound quality significantly.

Anyway, I’m interested in hearing your views on the topic of 192 kHz sampling vs. 44.1 kHz. I’ve read so much misunderstanding of the Nyquist frequency and of the human ear’s frequency range only going up to 20 kHz. Theory’s OK, but in practice it’s much more complex than this…

The piece by John Siau was very informative; I’m certainly glad that the discussion took place and that he had the time to write it. As for the sampling rate issue…there’s more to it than merely reducing noise. This is an area of research that seems to me to be the most important with regard to new recordings and high-resolution audio.

If you are thinking of old 8-bit systems as sounding distorted, that’s only because they weren’t employing proper dither. 8 bits is actually good enough for most mainstream pop and rock recordings.

Here’s a nice article that keeps the technical side very brief, but gives audio examples using only 4 bits. You can easily hear the effects — even over mediocre computer speakers or headphones.

http://www.tnt-audio.com/sorgenti/dither_e.html

This is more of a question than a comment. I have 2 systems with 2 Ayre DACs and both PC and Mac operating systems. I think the initial recording quality is extremely important. Some CDs or lower-resolution recordings sound better than higher-res recordings. My PC DAC will do 24-bit/192 kHz, using ASIO drivers. The Mac can only do 24/96. With both systems, I have either bought twice or recorded from vinyl at both 24/96 and 24/192, so I can compare. The most obvious difference I notice on playback is that the bass is less boomy with 24/192 and the highs may be more vibrant. I don’t think I am deluding myself. Comments?

Barry…I’m up early today before heading down from SF to the California Audio Show in Millbrae. I’ll try to respond with a comment or a post soon. Quickly, I have my doubts about the rigor of your comparison. If you’d like to put a couple of files on the FTP site…I could do a few tests myself.

Excellent article by John Siau. It clarifies things about this complicated subject that I did not know.

It’s very easy to confuse digital audio with digital video; I did it myself. With digital video, an increase in bit depth increases the number of gradations per colour channel. So, for instance, an 8-bit image will have only 2^8 gradations shared across all three colour channels (red, green, blue), giving 256 colours in total (which will need to be dithered to avoid posterisation). A 24-bit image will give 2^8 × 2^8 × 2^8 (= 2^(3×8), i.e. 2^24) colours, i.e. 256 gradations per colour channel.

The confusion arises because colours in video are equated with volume levels in audio, and 24-bit audio must therefore have 2^24 gradations of volume level from the “noise floor” to 0 dB, and 16-bit audio must have 2^16 gradations from the “noise floor” to 0 dB.

As I now know, digital audio uses a set of superposing functions to recreate the waveform, and digital audio and video are therefore not analogous.

Trying to impress on people the differences between digital audio and video may prove difficult with a non-scientific audience, unless you can find a way of creating analogies and avoiding mathematics.

I like to think of things in terms of steps. For video, imagine a staircase between black at the base and white at the top; these two values are fixed, so the more stairs we have between black and white (the more bits), the smaller the stairs have to be and the more colours we have. In audio, whilst the 0 dB value is fixed, the noise floor is not, so the stairs remain the same size; when the bit depth increases, the constant step size means the floor has to drop. So increasing from 16 to 24 bits adds 16,711,680 more steps, which is quite significant and results in an increase in theoretical S/N from ~96.3 dB to ~144.5 dB.
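The figures in this comment are easy to check. A short Python sketch (the helper names are mine) computes the number of codes for each word length, the extra codes gained going from 16 to 24 bits, and the ratio of full scale to one step expressed in dB, 20·log10(2^N):

```python
import math

def code_count(bits: int) -> int:
    """Number of distinct sample codes in an N-bit word."""
    return 2 ** bits

def full_scale_to_step_db(bits: int) -> float:
    """Ratio of full scale to one quantization step, in dB: 20*log10(2**N)."""
    return 20 * math.log10(2 ** bits)

extra_codes = code_count(24) - code_count(16)

if __name__ == "__main__":
    print(f"16-bit codes: {code_count(16):,}")                 # 65,536
    print(f"24-bit codes: {code_count(24):,}")                 # 16,777,216
    print(f"extra codes:  {extra_codes:,}")                    # 16,711,680
    print(f"16-bit range: {full_scale_to_step_db(16):.1f} dB")  # 96.3 dB
    print(f"24-bit range: {full_scale_to_step_db(24):.1f} dB")  # 144.5 dB
```

Each extra bit doubles the code count and adds 20·log10(2) ≈ 6.02 dB to the range.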

Well put…this was my meaning from the start. We don’t increase the density of the stairs…we extend the number of them.

I understand the bit depth behind each pixel…the more bits, the larger the color palette that can be delivered to each pixel. What I see in articles intended for non-technical types is the insistence that increasing the pixel density or DPI (dots per inch) is an appropriate analogy. This misses the mark too.

Mark,

This forum has seen many discussions of how the frequency spectrum captured during 96 kHz recording relates to human hearing capabilities. But this article made me think about the relationship between human hearing capabilities and the word lengths achievable in digital systems. I have no doubt that certain musical compositions with a large dynamic range that are recorded with 16-bit word lengths will lose sonic information due to signals being buried in the noise. But even though the digital noise floor is lowered with every bit, I wonder if there is a point at which our hearing gains no additional benefit from the lower noise. It seems unlikely, even in the quietest environment, that our hearing could cover 144 dB. Or is this not really an issue?

There is a point of diminishing returns…but if the technology is there to encompass the entire audible range of human hearing…why not take advantage of it? We don’t encounter 130 dB SPL in real life, and there’s not a lot of music released that eclipses the limits of standard CDs. But it does matter to me…I want the bigger box because I don’t think we have all of the answers yet.

I feel like I have fallen down a rabbit hole here. Things are certainly getting curiouser and curiouser.

It is easy to understand formulas like 16 bits × 6 dB = 96 dB, 24 × 6 = 144 dB and 20 × 6 = 120 dB for low-end DSD. Fine so far.

Here, the thinking that there are only 16 levels of loudness for the lesser of these seems wrong. If that were the case, only a 4-bit DAC would be needed, and roughly 4.5 bits (half bits) for the others.

In reality, the most significant (4) bits of the sample word give this rough gradation. The other bits must do something else, or why have them?

The concept of a very large number of loudness gradations (volume) is also absurd. Effectively, the human ear is believed to be sensitive only to changes of 1 dB or more.

You can dress down the electronics professor who said that later.

Looking at how DACs work, from the earliest designs to the ultra-sophisticated super-linear DACs, they ultimately achieve the same output effect. The older resistor networks (two different types) switched in graduated resistances across a stable DC input.

This would jolt the signal up or down by the quantized level in the period (time domain) determined by the signal. The output signal is jolted up or down by the set amount reasonably quickly, but the resultant signal is in no way smooth-looking, nor like the ragged staircase diagrams often shown. Digital signals tend not to be that tidy.

The point here is that the 24-bit scenario gives many more, smaller and more accurate nudges, resulting in a smoother signal. This would still need some filter smoothing, but with less complicated filters. It is the electronic signal that has these tiny shaping steps, not the sound output.

Life is never that simple. Modern super-linear DACs have an ingenious stepping mechanism. The input is processed using pulse-width modulation, giving a more refined result but not necessarily less noise. The greatest advantage comes once the input stream format is translated from its input “language” into a form that drives the DAC. Whether the input is PCM or DSD, the result ultimately uses the same output method, giving greater accuracy. Fine detail may be lost in the translation, as with a DTS stream.

However, the other article is also correct in stating that noise is reduced by more bits and faster sampling. The noise that remains is at very high frequencies and easier to eradicate. A smoother signal is easily achieved, and therefore less noise.

It must be obvious that the “HD” signal must trace out a more accurate-looking sound wave, otherwise why bother with HD in the first place? (To “see” sound on the fly you need a fast oscilloscope.)

The statement about giving 16 or 8 million loudness levels is very wrong. The signal that is shaped by many accurately quantified signals (20 bits or better) is known to render better HD playback otherwise it would be pointless to do in the first place.

The answer lies in the resulting accurate signal shape, not the loudness; that is what gives true HD.

The 24- or 32-bit scenario is a godsend in the recording studio because of better dynamic headroom during sound capture. This gives a bigger canvas to paint on.

The Nyquist frequency is another issue altogether, and one for playback equipment designers to fuss over.

Clipping, as you know, is extremely important because of its own strange aliasing behaviour when digitally sampled. Bigger sample words (24+ bits) make clipping easier to avoid with good care, and allow accurate tracing of soft signals during quieter passages. Keeping the preamplifier gain too low during recording will cause a loss of detail. This was covered in one of your earlier blog articles.

The statement:

“24-bit word lengths provide a very efficient method of improving the noise performance of digital systems.

When dither is properly applied, there is no advantage to long word lengths other than noise.

All quantization errors in a properly dithered digital system produce a random noise signal.

Properly dithered digital systems have infinite amplitude resolution.

Long word lengths do not improve the “resolution” of digital systems, they only improve the noise performance.”

Very wrong!

Sorry, I imagine you would like to close this subject, but the perpetual dissemination of bad info does little to promote HD.

Correct: the great improvement in noise, which is more easily managed.

The statement: “16 million levels of loudness”. This statement from another article was glib but contains some truth.

The revised statement: “There are 16 million levels of gradation available during the recording process.” would come closer to the truth. [That is for 24 bits.]

The probability of finding all 16 million levels on any one, or series of recordings, is extremely low.

Guys, time to dust off the old DSP (Digital Signal Processing) textbooks.

A graph of a sound wave has the amplitude domain on the X axis and the time domain on the Y axis (like the sample rate of 9600 per second).

Noise occurs only during repeated quantisation. Noise is always there, but at different levels of severity. This is determined by both the X axis (bits) and the Y axis (to get frequency).

The Nyquist bandwidth is equal to 1/2 of the sample rate and it defines the absolute limits of the frequencies that can be conveyed by a digital system.

A glimmer of truth but not fully accurate.

This affects the slower sampling rates more than for higher ones. This is also questionable, because narrow-band filters are not difficult to provide. The only issue would be in the Y domain (sample rate), and it has absolutely nothing to do with the number of bits. Take note.

SACD recordings are often not as bad as some audiophile enthusiasts make out. They neatly fix the X domain with 20-bit quantisation (not bad), but sadly do not cater for the Y domain, which remains the same as for regular CDs.

Neil…I admit that I will tread a little lightly here because I never did study DSP theory…I have only used it in my professional work on audio. But I would like to know what about the initial 5 statements qualifies as “very wrong”. I’d welcome some additional clarification. Having discussed this with my friend John at Benchmark, one of the most knowledgeable gentlemen on the subject that I can talk to, I’m curious where you feel things are incorrect.

I’m also confused by your discussion of the X and Y axes in the graphing of an audio waveform. The graphs that I’m used to, which are common in DAWs, use the X axis for the time domain (not the amplitude domain as you state above) and the Y axis for the amplitude (exactly the opposite of what you stated).

Quantization noise is an artifact of PCM sampling. Dither is used to turn severe and specific noise into less objectionable random noise…that is one of the main reasons for the necessity of dither. When done correctly, the resulting digitized and reproduced waves don’t have “amplitude levels” at all…not 65 K or 16 million.

I get very lost when you switch to Nyquist and state, “This affects the slower sampling rates more than for higher ones.”…as far as I’m aware, it holds true at all sample rates.
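The point that the Nyquist limit works the same way at every sample rate can be illustrated with a small Python sketch (the function names are hypothetical). A tone above fs/2 does not vanish when sampled; it folds back and produces exactly the same samples as a lower “alias” tone. The folding rule is identical at 44.1 kHz and at 96 kHz; only the frequency at which it kicks in changes.

```python
import math

def alias_frequency(f_hz: float, fs_hz: float) -> float:
    """Apparent frequency of a real tone f after sampling at fs,
    folded into the range 0..fs/2."""
    f = f_hz % fs_hz
    return fs_hz - f if f > fs_hz / 2 else f

def samples_identical(f1: float, f2: float, fs: float, n: int = 1000) -> bool:
    """True if cosines at f1 and f2 produce the same samples at rate fs."""
    return all(
        math.isclose(math.cos(2 * math.pi * f1 * k / fs),
                     math.cos(2 * math.pi * f2 * k / fs), abs_tol=1e-9)
        for k in range(n)
    )

if __name__ == "__main__":
    for fs in (44_100, 96_000):
        print(f"30 kHz tone at fs = {fs} Hz appears at "
              f"{alias_frequency(30_000, fs)} Hz")
    print(samples_identical(30_000, 14_100, 44_100))  # True
```

At 44.1 kHz the 30 kHz tone lands at 14.1 kHz, squarely in the audio band, while at 96 kHz it stays at 30 kHz because it is below that system’s 48 kHz Nyquist frequency. This is why the anti-aliasing filter must remove content above fs/2 before sampling, whatever the rate.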

We’ll get to the bottom of this one way or the other. Recall that the original issue is whether moving to 24 bits provides more discrete sample amplitudes within the same signal. I insisted that it does not. John Siau’s contribution to the discussion agrees that it only improves the noise performance. There is more dynamic range in a 24-bit system…not more resolution.

Mark

“24-bit word lengths provide a very efficient method of improving the noise performance of digital systems.”

should read:

“24-bit word lengths, when sampled rapidly, produce noise at higher frequency which is more easily managed”

the statement:

“All quantization errors in a properly dithered digital system produce a random noise signal.

Properly dithered digital systems have infinite amplitude resolution”

should read:

“A properly dithered 16-bit recording improves its output sound by marginally and randomly cancelling distortion caused by quantization errors.

This is done by adding random noise to the input. This works better on a particularly badly produced recording.”

Here we are talking about the sound distortion, not the noise.

The phrase:

“infinite amplitude resolution”? Really, which rabbit hole did we fall down? The problem is more likely to show itself with 16 bits or fewer.

Dither addresses the important consideration of distortion reduction, not noise. The mental picture painted by the original statement is “add a whole lot of noise to the input and then you got HD”. Really?

The statement:

“Long word lengths do not improve the “resolution” of digital systems, they only improve the noise performance.”

should read:

“Long word lengths do provide better resolution of the recorded signal when it is rapidly sampled. This is because of more, and smaller, and more accurate quantization steps.

The resultant noise is easier to manage.”

Perhaps a good reason to want to go over to HD in the first place.

Moving on:

The term:

“Quantization noise is an artifact of PCM sampling”

should read:

“Quantization noise is an artifact of digital sampling.” Care should be taken with the term “PCM”.

The statement:

“Dither is used to turn severe and specific noise into less objectionable random noise…that is one of the main reasons for the necessity of dither.”

should read:

“Dither is used to turn severely distorted output sound into less objectionable sound by randomly cancelling out distortion caused by inaccurate quantization.”

The statement:

“When done correctly, the resulting digitized and reproduced waves don’t have “amplitude levels” at all…not 65 K or 16 million.”

should read:

“The output signal has a large number of quantisation steps, which are much more numerous and precise with 24 bits and fast sampling.”

Using the absolute number of quantisation values tends to be irrelevant, because very many of the possible values would turn out to be statistically unnecessary.

You have explained clipping consequences many times. One of the fascinating things about DSP is that one is dealing with statistics, not simple numbers.

The number of actual values is much lower than one would think. This could be determined in practice by analysing the utilised bits on 1000 or more HD recordings. There is probably a statistical calculation trick available. Interesting but pointless.

Those so-called theoretical values are based on the digital word sizes but should be halved anyway because of polarity.

The recording and playback systems are not necessarily as precise during sampling or playback as we would like them to be anyway.

The numbers bandied around are very misleading. How often is music recorded peak-to-peak at full volume in its entirety, and thereafter carefully amplified to maximum volume to use the maximum quantisation range? Silly question.

One could generate an awful sound wave in the lab and quantise it once it was amplified to the max without clipping (difficult).

This would be useless and potentially damaging.

The Nyquist frequency is usually a red herring, baffling us with scientific terms, as you put it in a previous blog. It is a curiosity of historic interest.

The scientists at both Sony and Philips originally struggled over the issue during the specification of CDs (the Red Book). They statistically selected the most unlikely frequency to occur in reality and doubled it to get the sample rate (44.1, plus the other decimals).

Every time you double the sampling rate, you halve the already small likelihood of an alias wave occurring and relegate it to insignificance. The term is bandied around like some kind of dreaded disease.

Even top CD players used to attempt to eliminate this with doubled sampling rates and upsampling to 20 bits. This was done automatically and electronically.

This would give less noise and remove that alias frequency threat.

In the HD arena, the term (Nyquist) should really be thrown away so as not to confuse the masses unnecessarily.

We have a few subjects floating around here. The x and y notations have historically been used for hundreds of years.

If a graph is rotated by 90 degrees, it is likely that more comfort would be gained by swapping the axis notation. That could explain what DAWs are doing; I don’t know.

My only take is that if you look after the important signal shape and the playback sound wave, all the embedded harmonics and fine details will look after themselves. Shape is important!

The detail is in the precision of the encoding with many bits and rapid sampling. I agree that noise is far easier to remove with increased bits and fast sampling.

One has to emphasize that both values (bits + sample rate) are needed together for HD, hence my quip about SACD (i.e. DSD64) only doing half the job.

I have an aversion to the term “noise performance”. Who wants it anyway? In a sports car, perhaps?

Neil, thanks for taking the time to put forth your position and explain your objections. I must say, I don’t regard the original statements as being in conflict with your own. The “very wrong” attribution was hyperbole at best. I do appreciate the alternative viewpoint but see the issue essentially the same as before. I’m planning on writing a series of articles on the details presented in those first few statements…I’ll keep you posted.

Audio Myth – “24-bit Audio Has More Resolution Than 16-bit Audio”

The “very wrong” attribution was hyperbole at best. – not so, I don’t believe this.

Every statement is challenged there. I am saying every statement is wrong and I am saying the exact opposite of everything presented.

I am also saying the “Myth” is NOT a myth. I am still not ready to delete my mythical HD recordings yet, or to downsample them either.

I am either “very wrong” or correct. Time will tell.

There are no citations available in either case yet.

Thanks Neil…I’m afraid I’m going to remain on the John Siau side of this issue. He is not “very wrong”.

The article by John Siau is quite right as per my understanding, which is the conventional understanding. What we are really learning here is how entrenched the misunderstanding is.

Mark

Sorry, I ended up in a tailspin yesterday.

On further thought I realised that I had already tripped over the answer.

The answer is so simple and needs few words. I think I heard you sigh with relief!

John’s article was couched in too much jargon and provocative wording.

The aim with the statement below is to (hopefully) be more easily understood and also not to scare away people interested in the new HD technologies.

The answer follows:-

“The 24-bit digital playback system caters for a very large number of digital values that extends way beyond the capabilities of current digital technology found in recording studios or home players. The interface readily provides for future technical improvements while maintaining continued compatibility with previous recordings. The number of bits used is actually set by the precision of the studio digitizing process and is fixed at the time of recording. The unused [less significant] bits are usually randomly toggled to reduce possible quantization errors or to smooth out digitization noise on the player equipment. The process is known as dithering.”

Thanks Neil…I got a response from John this morning. I’ve asked him for his permission to post it. Stay tuned.

Mark

Promise to keep it short. Some of the comments are added for other general readers. I have done extensive research on the subject and now believe John’s statement about “24 bits not improving resolution over 16 bits” to be 100% correct, but with one proviso. We have all skirted around one important issue.

Most of us connect our systems with HDMI, S/PDIF or Toslink cables, mainly for convenience or budgetary reasons. These cables carry a series of one-bit pulses framed in a set, universal format. For what we are discussing here, this is known to us as an LPCM signal. The format is identical in both the 16- and 24-bit cases. The most significant bits encode up to 24 levels for 24 ‘bit’, achieving greater dynamic range, but toggle only up to 16 levels for 16 ‘bit’. The less significant bits in the stream provide a small number of sub-gradations, but only up to a predetermined number of combinations allowed by the format. The number of possible sub-gradations remains the same for both types (16 and 24 bits).

An example would be 16 × 32 = 512 gradations for 16 bit, increasing to 24 × 32 = 768 levels for 24 bits, giving a better dynamic range. Note again that the sub-gradation resolution is the same for both. Caveat: This calculation still needs checking!

The mass confusion is caused by the fact that both FLAC and WAV (RIFF) files actually do hold all of the 16 or 24 bits, though many of these are really inert. This gives readers of articles covering audio download files false expectations of an enormously exaggerated range of possible combinations, far higher than what is currently available and possibly ever will be.

An “ultra” high-resolution recording that has more bits encoded than normal will still sound the same (as a standard recording) when pushed down a normal LPCM line. The widely documented file formats give everyone false expectations of exactly what the files can achieve in a normal setting.

Such “Ultra” HD recordings, when and if they become available, would need a configuration like the one you, Mark, enjoy: high-quality DACs with the audio output fed directly into a good amplifier and speakers.

The general reader should also be alerted to the fact that hi-fi cables like HDMI can and do carry other types of signal as well, such as DTS when playing music and movie sound from DTS-encoded discs, and of course several other formats. HDMI also carries video. It is truly a universal cable, but with its own limitations.

Neil…I’m glad. I’ll have to dig into your post a little more. I guess what you’re saying relates to the practical realities of sending bits down an AES-EBU digital line. These lines can and do carry both 16 and 24 bits…I’m having trouble with the “sub gradations” part of your discussion. I’ll get back to you.

There’s a lot of great information in the article by Mr. Siau, and I’ll return to it in a second.

You wrote, “In its simplest form, the issue is whether adding 8 additional bits to each of the sample values in a PCM system increases the “resolution” of the system by providing more discrete values over the same total audio signal OR do the additional discrete values provide greater dynamic range by extending the range of the system.”

This is a non-issue; both statements are true. A higher bit-depth system does offer more discrete values in the same voltage range, and that allows for increased dynamic range, provided other noise in the system is sufficiently low. Those additional levels exist in the digital representation of the signal, but you don’t see them in the output of a DAC (unless it is of the non-oversampling type), because they are removed by a low-pass filter.

I’m still not sure what definition of resolution you are using that introduces any contradiction in the above statement. When scientists and engineers talk about the resolution of a measurement system, it generally refers to the smallest change in the measured quantity — in this case the voltage amplitude — that produces a change in output of the measurement device. Put another way, it’s the smallest difference the device allows you to measure — a meter stick marked off in millimeters has better resolution than one marked off in centimeters.
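With that definition, the smallest step an N-bit converter can represent is the full-scale span divided by 2^N. This short Python sketch assumes a hypothetical ±1 V full-scale range (a 2 V span; real converters differ) and shows that 24 bits make each step 256 times finer than 16 bits. As the comment goes on to say, in a real system it is noise, not this step size, that ends up limiting what can actually be resolved.

```python
SPAN_V = 2.0  # hypothetical full-scale span: -1 V to +1 V

def step_size_v(bits: int, span_v: float = SPAN_V) -> float:
    """Size of one quantization step (1 LSB) in volts."""
    return span_v / 2 ** bits

if __name__ == "__main__":
    for n in (16, 24):
        print(f"{n}-bit step: {step_size_v(n) * 1e6:.3f} uV")
    print(f"ratio: {step_size_v(16) / step_size_v(24):.0f}x finer")  # 256x finer
```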

(You are quite right that pixel density is not a good analogy for audio bit depth. It’s a much better analogy to audio sample rate, but let’s leave that for a different day.)

That leads me back to Siau’s article, and the assertion of infinite resolution. Whether analog or properly dithered digital, the resolution is limited by noise.

To draw an analogy, imagine using a ruler to measure the height of some object, but the object is randomly moving up and down — this is noise. Even if the ruler has infinite resolution — no limit to how small the gradations go — there is still uncertainty in the measurement.

A digital system without dither is equivalent to a ruler of finite resolution. Imagine that it is marked off in centimeters, and the random fluctuations in height of the object are far less than 1 cm. Rounding the measurement to the nearest centimeter introduces an error that we call quantization distortion.

Adding sufficient noise (whether well-defined dither or the near-random fluctuations of some analog circuits) eliminates quantization distortion. It can also appear to increase resolution when averaging the recorded values of a continuous or periodic function over a sufficient number of samples. In that case, however, it becomes a statistical rather than a deterministic system. Changes in level that are smaller than the least significant bit and are neither continuous nor periodic cannot be extracted from the noise.

Returning to the ruler analogy, dither shakes the object more so that the difference in its observable height is greater than 1 cm. If we average enough measurements, we can get a pretty accurate value of the object’s height. If we only have a few measurements, our measurement is not so good. Measuring the less shaky object with a device marked off in millimeters, though still having some uncertainty, gives a more representative result over few measurements.
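The ruler comparison can be checked numerically. This Python sketch assumes a hypothetical true height of 0.37 cm, uniform random "shake" of one gradation peak-to-peak in each case, and only four readings per measurement. The numbers are illustrative, not a model of audio hardware. It estimates the RMS error of the averaged reading for a centimeter ruler with a shaky object and for a millimeter ruler with a much steadier one.

```python
import math
import random

def rms_error_of_average(level, step, noise, n_samples, trials=2000, seed=7):
    """RMS error of the mean of n_samples shaken, quantized readings.

    step  -- ruler gradation (quantizer step), same units as level
    noise -- peak amplitude of the uniform random shake added before rounding
    """
    rng = random.Random(seed)
    sq = 0.0
    for _ in range(trials):
        total = 0.0
        for _ in range(n_samples):
            shaken = level + rng.uniform(-noise, noise)
            total += step * math.floor(shaken / step + 0.5)  # round to nearest mark
        err = total / n_samples - level
        sq += err * err
    return math.sqrt(sq / trials)

LEVEL = 0.37  # hypothetical true height, in cm
# Centimeter ruler: 1 cm of shake (dither) is needed to avoid quantization distortion.
coarse = rms_error_of_average(LEVEL, step=1.0, noise=0.5, n_samples=4)
# Millimeter ruler with a much steadier object: 1 mm of shake is enough.
fine = rms_error_of_average(LEVEL, step=0.1, noise=0.05, n_samples=4)
print(f"cm ruler, 4 readings: {coarse:.3f} cm RMS error")
print(f"mm ruler, 4 readings: {fine:.3f} cm RMS error")
```

Under these assumptions the millimeter ruler's error comes out roughly ten times smaller for the same four readings. Increase `n_samples` and both errors shrink, roughly as one over the square root of the number of readings.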

Music signals are composed, for the most part, of periodic functions that exist over a long enough time to be adequately reconstructed by a properly dithered 16-bit system sampling at 44.1 kS/s or, perhaps, somewhat faster. The beginnings of notes, on the other hand, can be shown to require higher bit depths and/or sample rates to be accurately represented. How much information is required for a listener not to perceive a difference between the direct and digitized signals, or not to be able to distinguish the difference made by adding bits (within the bounds dictated by other noise sources) or by increasing the sample rate, is something I think we would all agree is worth further study.

Thanks for the extensive and thoughtful comments…I think we’re on the same page here, even though our starting perspectives have consistently been at odds, it seems. I think it’s pretty clear now…thanks to your input and John Siau’s article.

AES-EBU: Yes, and an interesting subject on the electronics side. We are dealing with pulse signals whose bandwidth and cable lengths must be catered for.

These can carry any suitable signal, such as PCM (we use LPCM), DSD and others. Each of these needs a separate line for multichannel transport, but multiplexed coding systems such as DTS and DD (Dolby Digital) can share lines.

The LPCM encoding method and format is universal for both 16 and 24 bits, hence the confusion over “resolution”.

The number of bits in the signal frame is fixed, though the top pattern for 24 or 16 bits is different, in that they need the same number of bits to do the job. (There are fewer than 24 binary bits available in the LPCM encoding frame anyway.)

The issue is that there must be something more refined than just 16 or 24 levels of loudness in 6 dB steps. Otherwise there would be a crude progression of:

For 24 bits:

0, 6, 12, 18, 24, 30, 36, 42, 48, 54, 60, 66, 72, 78, 84, 90, 96, 102, 108, 114, 120, 126, 132, 138, 144 dB

If these were the only levels required, the audio file holding a recording could be compressed far beyond what even MP3 can achieve.

Note that a 16-“bit” signal would still need 5 encoding bits to select 1 of 16 levels, and a 24-“bit” signal would select 1 of 24 codes using the same 5 encoding bits.

The term “sub-graduation” is possibly quite confusing here because it was borrowed from a different scientific field.

I am saying that those 6 dB steps must be refined into smaller parts, so that the level can step to values like 5 dB or 5.5 dB.

Note that a previous notion that there could be millions of sub-levels is ludicrous, because our hearing could never be that refined anyway. (You mentioned this before.)

I believe there are obviously far fewer than some think, but I still guess that there could be only 32 fractions of each 6 dB level. I could still be wrong here, because further research is needed.

This covers what happens during playback at home, not in the studio during processing.

This is further to what I tried to add earlier. Getting deeper into the AES bit frame format is leading me to the idea that my original misunderstanding was based on a few misconceptions.

The way the bits in the frame are laid out clearly defines the full bit pattern of the signal, which leads me back to square one.
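For reference, here is a Python sketch of how one sample sits in a single AES3 (AES/EBU) subframe. It is based on my understanding of the standard's 32-time-slot layout: preamble in slots 0-3, auxiliary bits in 4-7, a 20-bit audio field in 8-27, then validity, user, channel-status and parity bits. A 24-bit word takes over the auxiliary slots and occupies 4-27 with its MSB in slot 27; shorter words are left-justified. The function name and interface are hypothetical, and the biphase-mark line coding is not modeled.

```python
def pack_aes3_subframe(sample: int, bits: int, validity: int = 0,
                       user: int = 0, channel_status: int = 0) -> list:
    """Lay out one AES3 subframe as a list of 32 time slots (hypothetical helper).

    Slots 0-3 hold the preamble, which is a deliberate line-coding violation
    rather than ordinary data, so it is represented as None here.
    """
    if bits not in (16, 20, 24):
        raise ValueError("this sketch only handles 16-, 20- or 24-bit words")
    code = sample & ((1 << bits) - 1)  # two's-complement word of the given width
    slots = [None] * 4 + [0] * 28
    # Left-justify the word so its MSB always lands in slot 27; for words shorter
    # than 24 bits the unused low-order slots stay 0.
    first = 28 - bits
    for i in range(bits):
        slots[first + i] = (code >> i) & 1  # transmitted LSB first
    slots[28], slots[29], slots[30] = validity, user, channel_status
    slots[31] = sum(slots[4:31]) % 2  # even parity over slots 4-31
    return slots

full_scale_negative = pack_aes3_subframe(-1, 24)
print(full_scale_negative[4:28])  # all 24 audio slots are set
```

The point of the sketch is simply that the subframe has room for a complete 24-bit word; a 16-bit word uses the top 16 of those slots and leaves the rest at zero.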

I was trying to move in the same direction as John on the idea that the resolution itself is not improved by going from 16 to 24 bits.

Further research and study may eventually help me navigate this minefield.

I now believe again that the resolution could be improved, but only if the recording equipment could provide it. Playback DACs can never provide anything better than the studio.

In reality, studio ADCs probably don’t provide anywhere near 65 thousand levels in the first place, which would mean that the additional 8 bits would be inert, making the original point about resolution true.
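One standard way to put a number on how many levels a converter really delivers is the effective number of bits (ENOB), derived from its measured SINAD (signal-to-noise-and-distortion ratio). This minimal Python sketch uses the textbook relation for a full-scale sine wave. The SINAD values are hypothetical, not measurements of any studio ADC.

```python
def enob(sinad_db: float) -> float:
    """Effective number of bits from a SINAD figure in dB, using the ideal
    full-scale-sine relation SINAD = 6.02 * N + 1.76 dB solved for N."""
    return (sinad_db - 1.76) / 6.02

# Hypothetical SINAD figures, for illustration only.
for sinad in (96.0, 110.0, 120.0):
    print(f"SINAD {sinad:.0f} dB -> about {enob(sinad):.1f} effective bits")
```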

Probably the benefit of HD audio comes from faster sampling and better dynamic range, with no resolution improvements available. The term “resolution” should be avoided by people promoting HD audio.

The study goes on, but I eagerly await John’s response.

I got some information back from John…I will reach out to him again, and get an update.

I know that both 16- and 24-bit signals are accommodated by the AES-EBU standard, with each sample carried in a 32-bit subframe (two subframes, one per channel, make up each 64-bit frame). However, I think there is some confusion about how encoding of an analog signal to PCM digital works. The Nyquist Theorem is still a valid component of the digitization picture, AND we can achieve 6 dB per bit on the dynamic range side of the process. This does not translate to an actual sequence of multiples of 6 from 0 to 144, as you seem to indicate in your post. The “grid of samples and amplitudes” allows us to capture (with quantization errors and dither applied) and store the analog signal in a digital form. And then we can process that data back to the exact analog signal that we started with. So I’m not with you on the sub-gradation of the signal. We already have all of the intermediate “sub” steps through this process.
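A short Python sketch of the arithmetic behind “6 dB per bit”, included to separate two quantities that are easy to run together: the number of distinct codes an N-bit word can hold (2^N) and the ratio between full scale and one step, expressed in decibels. The 6 dB figure describes the second quantity; it does not mean the codes themselves are spaced 6 dB apart.

```python
import math

def num_codes(bits: int) -> int:
    """Number of distinct sample values an N-bit PCM word can hold."""
    return 2 ** bits

def dynamic_range_db(bits: int) -> float:
    """Full scale relative to one LSB, in dB: 20*log10(2**N), about 6.02 dB per bit."""
    return 20 * math.log10(2 ** bits)

for bits in (16, 24):
    print(bits, num_codes(bits), round(dynamic_range_db(bits), 1))
```

For a full-scale sine wave the usual textbook figure is 6.02N + 1.76 dB, which gives the often-quoted ~98 dB for 16 bits; the 96 dB and 144 dB figures used in this thread are the simpler 6 dB-per-bit approximation.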

Here’s some comments from John Siau on the topic:

“Each sample should be looked at as an impulse. Upsampling DACs insert 0-amplitude samples between each actual sample. An upsampling ratio of 256 inserts 255 0-value samples between each successive input sample. This series of impulses (most of which are 0) feed into a sin(x)/x reconstruction filter. The result is a high sample rate waveform containing the original signal plus some high frequency noise. A zero order hold is often unavoidable, but this typically occurs at 256Fs in an oversampled DAC. This implies that the noise produced by the zero-order hold starts near 256/2 * the original sample rate. This high-frequency noise is well above the Nyquist frequency of the original samples and is easily removed with a simple analog low-pass filter. The end result is a continuous band-limited waveform that is an exact replica of any band-limited input waveform. All of the remaining noise is a result of quantization errors and dither noise. If dither was properly applied before quantizing in the A/D, the errors will be random. The end result is the original continuous waveform plus white noise, where the white noise is not correlated with the audio.”
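The upsample-then-filter process John describes can be sketched in a few lines of Python. This is a simplified illustration, not Benchmark’s implementation: it uses an upsampling ratio of 4 rather than 256, a truncated (unwindowed) sin(x)/x kernel, a single test sine at a tenth of the sample rate, and no quantization or dither. It inserts zero-valued samples, filters the result, and checks the values that appear between the original samples against the true continuous sine.

```python
import math

def sinc(x: float) -> float:
    """Normalized sinc: sin(pi*x)/(pi*x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)

def zero_stuff(samples, factor):
    """Insert factor-1 zero-valued samples after each input sample."""
    out = [0.0] * (len(samples) * factor)
    for i, s in enumerate(samples):
        out[i * factor] = s
    return out

def sinc_reconstruct(stuffed, factor, half_taps):
    """Filter the zero-stuffed stream with a truncated sin(x)/x kernel.

    h[k] = sinc(k / factor) is an ideal low-pass filter with its cutoff at the
    original Nyquist frequency and a gain of `factor`, which restores the
    amplitude lost by inserting zeros. Truncating it to +/- half_taps leaves
    a small error that grows toward the ends of the data.
    """
    kernel = [sinc(k / factor) for k in range(-half_taps, half_taps + 1)]
    n = len(stuffed)
    out = []
    for m in range(n):
        acc = 0.0
        for idx, h in enumerate(kernel):
            j = m - (idx - half_taps)
            if 0 <= j < n:
                acc += stuffed[j] * h
        out.append(acc)
    return out

FACTOR = 4
FREQ = 0.1  # cycles per original sample (4.41 kHz if the rate were 44.1 kS/s)
PHASE = 0.3
original = [math.sin(2 * math.pi * FREQ * n + PHASE) for n in range(256)]
upsampled = sinc_reconstruct(zero_stuff(original, FACTOR), FACTOR, half_taps=512)

# Output index m corresponds to time m / FACTOR in original sample periods.
# Compare against the true waveform in the middle, away from the truncated ends.
middle = range(384, 640)
max_err = max(abs(upsampled[m] - math.sin(2 * math.pi * FREQ * m / FACTOR + PHASE))
              for m in middle)
print(f"max error between original samples: {max_err:.4f}")
```

Truncating the kernel is the main source of error here. Practical oversampling filters use windowed or otherwise optimized kernels, typically in several cascaded stages.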

Thank you for this article. When you start looking at the practical aspects of analog-to-digital conversion, it becomes even more obvious that bit “depth” contributes nothing to the accuracy of the waveform reproduced by the DAC. When you look at these waveforms on an oscilloscope, it’s a wonder we hear anything remotely like the original sound.

Here’s how I got there:

16 bits offers 96 dB dynamic range

24 bits offers 144 dB dynamic range

Record albums are ~60 dB dynamic range (liberal)

Reel-to-reel ~70 dB dynamic range (liberal)

60 dB / 96 dB = 0.625 x 16 bits = 10 bits

60 dB / 144 dB ≈ 0.416667 x 24 bits = 10 bits

70 dB / 96 dB ≈ 0.729167 x 16 bits ≈ 12 bits (11.67)

70 dB / 144 dB ≈ 0.486111 x 24 bits ≈ 12 bits (11.67)
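The ratios above reduce to a single division: the dynamic range in dB divided by about 6.02 dB per bit, independent of the word length of the container. A minimal Python sketch using the commenter’s (self-described liberal) figures of 60 dB and 70 dB. It ignores the extra 1.76 dB sine-wave term and any dither noise, so treat the results as rough.

```python
import math

DB_PER_BIT = 20 * math.log10(2)  # about 6.02 dB per bit

def bits_needed(dynamic_range_db: float) -> int:
    """Smallest whole number of bits whose full-scale-to-LSB ratio spans the range."""
    return math.ceil(dynamic_range_db / DB_PER_BIT)

for source, dr in (("record album", 60), ("reel-to-reel", 70)):
    print(source, bits_needed(dr))
```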

So, regardless of available bit depth, 99.9999% of music can be represented by about 12 bits or fewer. It’s not tones above 20 kHz that need to be represented; rather, it’s the signal variations of tones at or below 20 kHz that need more samples to represent.

Try it yourself. Listen to a crowded passage of music with a lot of cymbals. As sample rates increase, not only is it easier to listen to a single instrument, but the cymbals themselves retain their timbre and sonic character, whereas at lower rates they’re lost in a cacophony much like the sound of an open, high-pressure air hose.

You’ve discovered what I have been saying for a long time. Thanks.