Time Aligning Waveforms

I just finished going through the stack of emails that needed my attention and responded to a bunch of comments on some recent posts. The 16-bit vs. 24-bit debate continues to draw comments. I’m planning on going through the John Siau article in detail with illustrations and possibly even some animations to reinforce the concepts that he so skillfully wrote about. It’s proven to be a difficult subject to explain, and for many to grasp…stay tuned.

I got a curious email the other day regarding the idea that my recordings are not worthy of evaluation in the AVS Forum’s CD vs. HD challenge that Scott Wilkinson and I concocted. In spite of our insistence that the test is not scientific and that its results are not indicative of anything, it’s received a lot of attention (over 2,600 comments) and stark positions on both sides of the issue. We’re planning a “Home Theater Geek” podcast in early September to go over the challenge and talk about other current high-resolution topics. Scott had asked whether some of his audio writer associates would care to take the challenge. One of them sent along a question that seems somewhat curious.

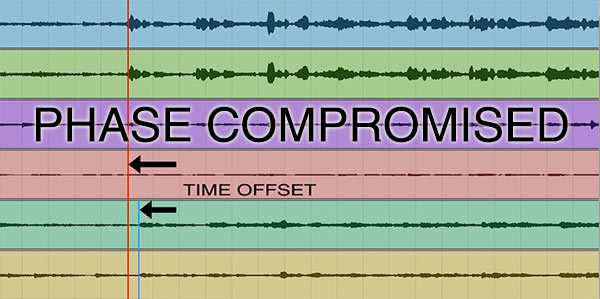

The author wrote, “I’ve got most of the AIX Records and I’ve met Mark and discussed his micing technique. He uses multiple stereo pairs. He then ‘time aligns’ the waveform so the impulses line up, which is what most multi-mic recording engineers do during the editing process. But aligning the impulse attacks does not phase align the sound.”

Because of this, he declined Scott’s request to get involved. His reasoning was that my tracks were too “phase compromised” to be of any use in an A/B comparison.

Phase “compromised” is a description that I’ve never heard before. It sounds a little like a disease that audio recordings can fall prey to if the recording engineer is not careful. Honestly, I can only imagine what the author was thinking. But I can assure him and the rest of my customers and readers that I do not “time align” the waveforms or audio tracks associated with any of the tracks that I capture…stereo pairs or not. And while I am familiar with the process of aligning tracks in commercial recordings, it’s not a procedure that “most multi-mic recording engineers do.”

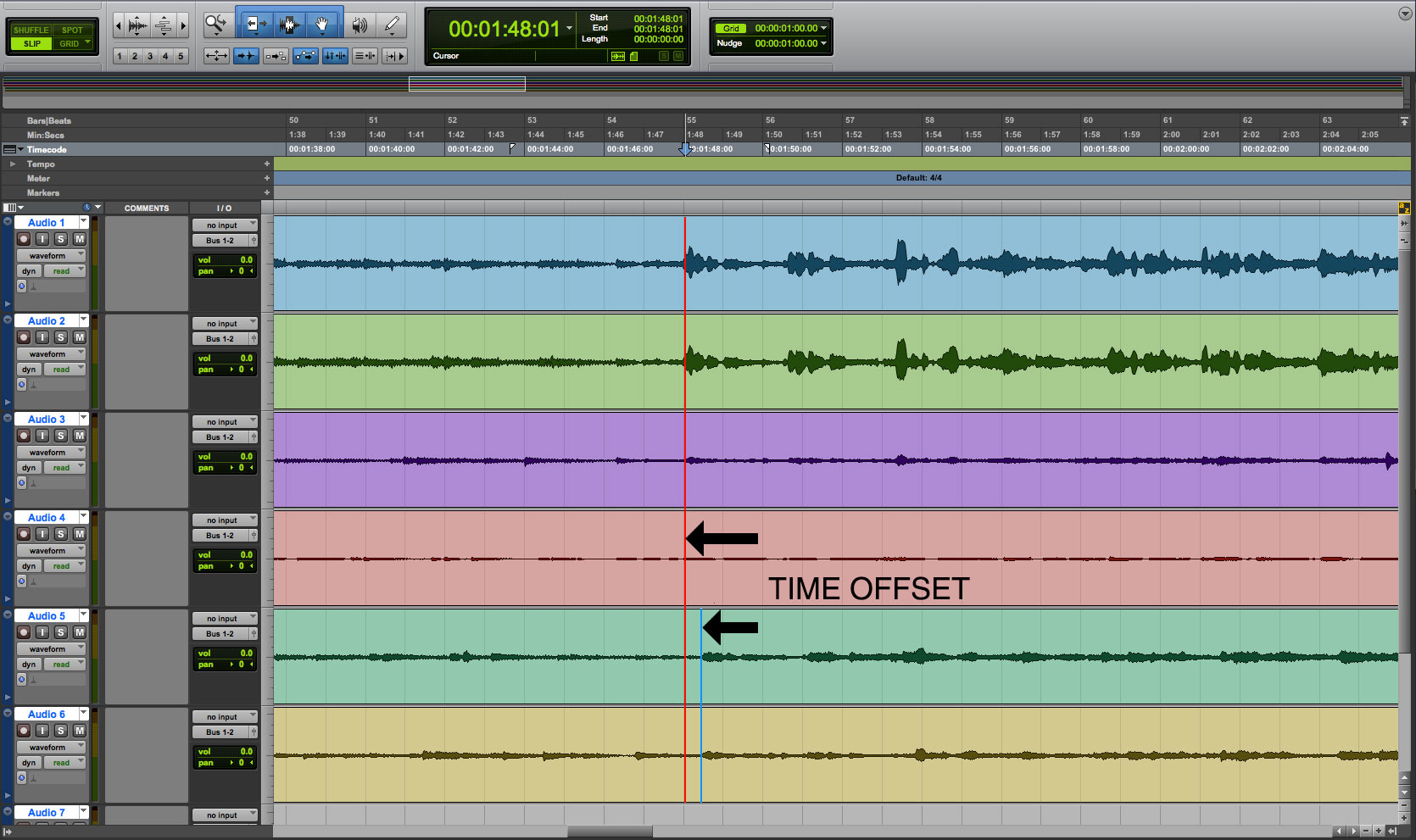

Imagine the following scenario. You’ve been hired to record a concerto (a concerto features a soloist or soloists in combination with a full symphony orchestra, e.g., Beethoven’s Violin Concerto). Traditional miking technique would have you place a stereo pair (Blumlein or ORTF or spaced omnis) above the orchestra about 15 feet behind the conductor. You might also place a dedicated mic or stereo pair near the soloist. Because the two pairs of mics are some distance apart, the signals from the close mics and the more distant pair will not be “time coherent”. It takes more time for the sound to reach the farther pair of mics.
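To put a rough number on that delay, here is a minimal Python sketch. It assumes a speed of sound of about 343 m/s (air at roughly 20 °C) and treats the 15 feet as the extra path length from the soloist to the main pair compared with the spot mic; both are illustrative assumptions, and the function names are hypothetical.

```python
# Minimal sketch: arrival-time difference between a spot mic and a more distant pair.
# Assumptions (not from the post): c = 343 m/s, and 15 ft is the extra path length.

SPEED_OF_SOUND_M_S = 343.0   # assumed, air at roughly 20 °C
FEET_TO_METERS = 0.3048


def arrival_delay_s(extra_path_m: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Extra travel time, in seconds, for sound covering extra_path_m more metres."""
    return extra_path_m / c


def delay_in_samples(delay_s: float, sample_rate_hz: int) -> float:
    """Convert a delay in seconds to a (fractional) number of samples."""
    return delay_s * sample_rate_hz


extra_path_m = 15 * FEET_TO_METERS            # about 4.57 m
delay = arrival_delay_s(extra_path_m)
print(f"delay: {delay * 1000:.1f} ms")         # delay: 13.3 ms
for rate in (44_100, 96_000):
    print(f"{rate} Hz: {delay_in_samples(delay, rate):.0f} samples")
```

At typical recording sample rates that offset spans hundreds to over a thousand samples, which is why it is plainly visible in a session's track view.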

Figure 1 – This is the track layout of a Pro Tools session showing the “delay” of a stereo pair due to distance. Some engineers would manually move the tracks…I leave them alone.

When summed electrically during a mixdown session, the difference in time will cause a very slight phase shift and “muddy” the sound. So on somewhat rare occasions, audio engineers will manually align the signals coming from the different locations. I don’t.
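A standard way to describe what happens when two copies of the same sound arrive with a small time offset and are summed is comb filtering: at some frequencies the copies reinforce and at others they cancel. The sketch below is a textbook model, not a description of any mix in this post; the 1 ms offset and the relative level `g` are hypothetical values chosen for illustration.

```python
import cmath
import math


def summed_gain(freq_hz: float, delay_s: float, g: float = 1.0) -> float:
    """Magnitude of x(t) + g * x(t - delay_s) relative to x(t) alone, for a sine at freq_hz."""
    return abs(1 + g * cmath.exp(-2j * math.pi * freq_hz * delay_s))


def notch_frequencies(delay_s: float, max_hz: float) -> list[float]:
    """Frequencies where equal-level copies cancel: f = (2k + 1) / (2 * delay_s)."""
    notches = []
    k = 0
    while True:
        f = (2 * k + 1) / (2 * delay_s)
        if f > max_hz:
            return notches
        notches.append(f)
        k += 1


delay = 0.001  # hypothetical 1 ms offset, roughly 34 cm of extra path
print([round(f) for f in notch_frequencies(delay, 5000)])  # [500, 1500, 2500, 3500, 4500]
print(round(summed_gain(500, delay), 6))          # 0.0  (full cancellation)
print(round(summed_gain(1000, delay), 6))         # 2.0  (in phase, +6 dB)
print(round(summed_gain(500, delay, g=0.5), 6))   # 0.5  (quieter copy, partial dip)
```

The last line hints at why level matters: when the delayed copy is much quieter, as a distant pair often is relative to a close mic, the dips are shallower than the full cancellation of two equal-level copies.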

I use a large number of stereo pairs and mono microphones placed quite close to the source instruments or voices. They are all about the same distance from the source sounds and are therefore “time coherent” with regard to each other, so they don’t need any “time aligning”. The omnidirectional microphones at the back of the hall are the only ones that might have some timing issues, but they are used for reverberation and don’t compromise the phase of the main source tracks.

Even if I did manually adjust the alignment of my stereo signals, it still wouldn’t rule out using my recordings for a comparison between CD resolution and HD-Audio specs. I don’t understand why the author of the question didn’t just politely decline Scott’s invitation to participate rather than fabricate a flimsy excuse…one that isn’t true.

Mark,

Clearly the person who was objecting to your recordings being “phase-compromised” (not a term I’ve heard before either in my 40+ years in pro audio) has very little if any experience in recording. Your method of aligning arrival times should be just fine, better than most, so long as the correct attacks are used, and I’m sure they are. Any time there are multiple mics in a sound field there will be multiple arrivals, and in some cases, those are very useful in creating a believable recording. Believable, but…ahem…phase compromised. Sheesh.

We could also talk about the fact that, above a certain mid-band transitional frequency, human hearing localizes sources based on the arrival-time and intensity-differential portions of the HRTF, because the wavelengths of the frequencies involved are far shorter than the interaural distance, so phase would be ambiguous even in real life with just two ears. So our own human hearing is, at some point, “phase-compromised”. Your goal is to capture that phase-compromised audio with all possible accuracy. Seems that goal has been met.
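The wavelength argument can be checked with simple arithmetic: wavelength equals the speed of sound divided by frequency. This sketch assumes 343 m/s and an interaural distance of 0.18 m; real ear spacing varies from listener to listener, so the crossover figure is only a ballpark.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed
EAR_SPACING_M = 0.18        # assumed typical adult value; varies by listener


def wavelength_m(freq_hz: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Wavelength in metres of a tone at freq_hz."""
    return c / freq_hz


def crossover_freq_hz(spacing_m: float = EAR_SPACING_M, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Frequency whose wavelength equals the ear spacing."""
    return c / spacing_m


for f in (250, 1000, 4000, 10000):
    lam = wavelength_m(f)
    relation = "longer" if lam > EAR_SPACING_M else "shorter"
    print(f"{f:>5} Hz: wavelength {lam:.3f} m ({relation} than the ear spacing)")

print(f"wavelength equals ear spacing near {crossover_freq_hz():.0f} Hz")  # ~1906 Hz
```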

However, I do think the experiment was interesting to a point. I’m not sure it proved much because of the lack of control, the “gaming” issues, and the lack of a universal and properly done ABX comparator.

I do think the one result that surfaced above all others was the fact that the “AVS” in AVS Forum stands for “Arguing Very Strongly”, a fact that has been tested and confirmed countless times before and has just been confirmed again.

I was quite surprised by this assessment as well. I capture the signals coming in from a bunch of carefully placed microphones and move ahead. I don’t change the phase of anything. The AVS Forum loves to argue and post…I’m looking forward to being on Scott’s show in September to talk about the “tests”.

How do the musicians stay in time with each other if they are an appreciable distance apart and have no local monitoring (i.e., IEMs, headphones, etc.)? I notice on some of your recordings there can be quite a large ensemble that is spaced out quite a bit (to avoid microphone bleed, I assume) yet appears to have no local monitoring.

I don’t deliberately space the musicians far apart…the space and the ensemble sort of manage that themselves. I do try to place the rhythm section close to each other for the best musical results…getting the groove is critically important. The rest of the musicians just listen. On some occasions I have used IEMs.

Consider the difficulty of recording an artist live (or maybe in the studio). If we are to get overly concerned with phase alignment, what are we to do about pitch as a saxophone great moves his wailin’ sax about? Should we correct for the Doppler effect? Does it add to or subtract from the performance? Is it even noticeable? Should we correct just for the lead or for all acoustic instruments moving about relative to their mikes?
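For a sense of scale, the classic Doppler formula for a source moving toward a stationary microphone is f' = f · c / (c − v). The sketch below plugs in a hypothetical case (a sax bell moving toward the mic at 1 m/s while playing A4 = 440 Hz) and expresses the shift in cents; the speed and the note are assumptions, not measurements.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed


def doppler_observed_hz(f_source_hz: float, v_toward_mic_m_s: float,
                        c: float = SPEED_OF_SOUND_M_S) -> float:
    """Frequency heard at a stationary mic from a source moving toward it (negative v = away)."""
    return f_source_hz * c / (c - v_toward_mic_m_s)


def cents(f_observed_hz: float, f_reference_hz: float) -> float:
    """Pitch difference in cents (100 cents = one equal-tempered semitone)."""
    return 1200 * math.log2(f_observed_hz / f_reference_hz)


# Hypothetical: sax bell moving toward the mic at 1 m/s while playing A4 = 440 Hz.
f_obs = doppler_observed_hz(440.0, 1.0)
print(f"{f_obs:.2f} Hz, {cents(f_obs, 440.0):+.1f} cents")  # 441.29 Hz, +5.1 cents
```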

“Sometimes better is the enemy of good enough.”

Seems like a red herring argument from the emailer for this case. Time alignment needs to be the same between differently sampled versions of a song if one is trying to compare them so that differences in timing don’t give away which song is which in a blind test, but that’s about it as far as I understand time alignment in this situation.
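If you wanted to confirm that two versions of a track line up before a blind comparison, one common approach is cross-correlation: slide one signal against the other and pick the offset where they match best. This pure-Python sketch assumes both versions are already at the same sample rate (for example, after converting the high-resolution file just for the check); the function name `best_lag` is hypothetical, and the brute-force loop is only meant for short excerpts.

```python
import random


def best_lag(a: list[float], b: list[float], max_lag: int) -> int:
    """Lag (in samples) in [-max_lag, max_lag] that maximizes sum(a[i] * b[i + lag]).

    A positive result means b is delayed relative to a.
    """
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, x in enumerate(a):
            j = i + lag
            if 0 <= j < len(b):
                score += x * b[j]
        if score > best_score:
            best, best_score = lag, score
    return best


# Hypothetical test signal: version B is version A starting 3 samples late.
rng = random.Random(0)
a = [rng.uniform(-1.0, 1.0) for _ in range(2000)]
b = [0.0] * 3 + a[:-3]
print(best_lag(a, b, max_lag=10))  # 3
```

A result of 0 would indicate the two versions already start together, so timing alone could not give one away.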

But in general, “phase” has somewhat baffled me, so I have some sympathy for the emailer in not understanding something. In my simple way I thought “phase” was another way to refer to timing differences between sinusoidal signals (for example, in AC power calculations), but when Ethan Winer and JJ Johnston stated that our ears are relatively insensitive to phase differences, I frankly never spent the time to pay attention to what they were stating and understand it. There is also the aspect that speaker manufacturers sometimes refer to as “phase coherence”, but rightly or wrongly I thought that just meant ensuring that the combo of the speaker enclosure and crossover shouldn’t create timing problems reproducing the different frequency components of an instrument. That stated, given the bouncing around of sounds in a room and frequently not sitting exactly between my speakers and still hearing things OK (some losses to stereo imaging, but no muddiness to the music), the problem of a lack of perfect “phase coherence” and “phase” in general are things not high on my worry radar.

So for me and perhaps others, understanding “phase” could be mostly laziness (definitely for me anyway), but it could also be that “phase” is a term used a bit differently by audio industry players in different situations or perhaps the same way but with different assumptions that are not clearly stated. Whatever the case, if the music sounds really good, and yours always does, I stop worrying about how you’re doing it.

The two versions of the songs…A and B…are the same stereo mix with only the sample rate and word length changed. The timing “compromise” is indeed a red herring.

If I properly understand the description of your technique, it’s almost equivalent in result to lining up the impulses from each channel, in that the time for the beginning of a note to hit the microphone will be about the same for each instrument. Is this the correct interpretation so far?

If so, the phase relationships of those signals will be different than for a listener at the conductor or audience position. The natural setting would have a delay between, for example, the first violins and the woodwinds, with an even longer delay for the brass and the percussion section. If you do not correct for these delays, the time, and therefore phase, relationships between instruments will be different than those experienced by a listener in the hall.

The corrections would, of course, be different for your various mixes. Maybe you are making similar adjustments, but would describe them differently.

I only ever bought one AIX DVD-Audio — a couple of Mozart symphonies, I believe. The soundstage seemed rather flat to me, which would be consistent with altering the timing/phase relationships, as I just described.

I’m almost exclusively a stereo listener, so soundstage depth is important to me. With the middle-of-the-action perspective you seem to favor, the phase relationships would be both less important and more similar to the tracks you are laying down.

Better-preserved timing and phase relationships are among the theoretical benefits of higher sample rates, so that may be what the commenter had in mind. Still, he should get his facts straight about your process. And, ultimately, your recording, mixing, and mastering techniques are a matter of artistic expression. To that extent, the way you want them is the way they should be.

In any case, it’s great that the test generated so much interest.

Andrea…I think your description of my recording technique is reasonable enough. If you’d had a chance to view some of the videos of my work, you would see the microphones placed all around the ensemble…usually in stereo pairs and close to the instruments. This minimizes the effect of timing or phase problems, but it does mean that the actual experience of the recorded sound is different from what a conductor would hear. I make no adjustments for distance as alleged by the author of the email.

It’s interesting that you have the Mozart recording and find it flat. A review of the Bach Brandenburg from the same sessions lauded it as the “first time I’ve heard great depth into the sections” because of the multiple stereo mikes that I employed. Leaving the timing/phase relationships unaltered makes this possible.

When I say it sounded flat, I mean that the various sections of the orchestra sounded as though they were roughly the same distance away from me, rather than one being behind the other. I see how the enhanced detail of your close-miking approach could be described as “hearing great depth into the sections” — one could, for instance, presumably hear more of what the violas were doing than on a recording using only a few microphones further back. Here, I’m using depth in the context of spatial perception, which may be different from what that reviewer intended.

As some of the other commenters have pointed out, the extent to which phase relationships are audible is another one of those open issues. Most research indicates it’s not terribly important. That doesn’t mean it’s not worth thinking about.

Phase is another area of audio and hearing that could use some additional research. I agree.

Human hearing’s sensitivity to arrival phase quickly diminishes once the phase differential is large enough that determining lead/lag becomes ambiguous. That differential is a function of frequency and of arrival angle relative to straight ahead. However, as phase becomes less of a factor in determining source location, arrival time itself, determined by the difference in attack arrival times, takes over, and the frequency-response part of the HRTF is already dominant at that point.
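The dependence on frequency and arrival angle can be sketched with a deliberately simplified model: the interaural time difference (ITD) is taken as (d / c) · sin θ, which ignores diffraction around the head (more detailed models, such as Woodworth's spherical-head formula, exist), and lead/lag is treated as ambiguous once the interaural phase exceeds half a cycle, i.e. above f = 1 / (2 · ITD). The 0.18 m ear spacing and the 343 m/s speed of sound are assumptions.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed
EAR_SPACING_M = 0.18        # assumed; varies by listener


def itd_s(angle_deg: float, d: float = EAR_SPACING_M, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Simplified interaural time difference: straight-line path, no head shadow."""
    return d / c * math.sin(math.radians(angle_deg))


def ambiguity_freq_hz(angle_deg: float) -> float:
    """Above this frequency the interaural phase exceeds half a cycle, so lead/lag is ambiguous."""
    t = itd_s(angle_deg)
    return math.inf if t == 0 else 1 / (2 * t)


for angle in (10, 30, 60, 90):
    print(f"{angle:>2} deg off-center: ITD {itd_s(angle) * 1e6:.0f} us, "
          f"phase ambiguous above ~{ambiguity_freq_hz(angle):.0f} Hz")
```

Under these assumptions the ambiguity threshold falls as a source moves off-center, which matches the point that the differential depends on both frequency and arrival angle.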

It seems pretty clear that once any arrival time anomalies caused by physical mic spacing have been corrected for (and the need for that would be somewhat subjective), the job’s been done.

The idea that something is “phase-compromised” is simply the result of someone’s lack of understanding of phase and human hearing.

Very nicely put. Thanks.

The “phase-compromised” guy could also be a binaural guy thinking that binaural is the only pure way to capture natural phase. Ignoring, of course, the big problems in binaural, in particular the fact that the HRTF is unique to the individual and can only be generalized for everyone, thus making every binaural recording a “compromise”.

My gut reaction is that the guy was looking for an excuse not to participate out of fear that he wouldn’t be able to tell the CD vs. HD files apart. I’m just guessing, however.

Hello Mr. Mark,

I’m quite happy because I was able to “solve” the test straight away on the first run, which means:

I have good ears 🙂

and a good system, although a very modest one (but I live happily with it)

For those who say there’s no noticeable difference, if I may, a couple of suggestions:

– volume pretty high (not too much)

– the first 30 seconds are enough; if you can’t hear the difference at the start, you won’t be able to later either

– don’t concentrate on the notes; instead, close your eyes and let the music come to you

Once you reach this state of mind, the difference becomes more obvious.

Of course, if you’re not listening carefully (say, you’re browsing the net), then it is almost impossible,

because the notes are the same and the overall impact is really close, but…

the HD version has:

On the Street: more energy

Mosaic: shakers more natural and “alive”

Just My Imagination: just more musical, in a vinyl kind of way

The CD version at first does seem to be the same, but it’s a colder presentation.

For me, HD makes a lot of sense!

Sorry for my poor English (Italy…), and thanks again to Mr. Mark for the effortless contribution to this amazing hobby. Keep going!

Best regards,

Andrea

P.S.

My system:

laptop with tons of tweaks applied for maximum music enjoyment

HRT II+ DAC

old Pioneer SA-710 with some tweaks applied

twisted OCF cables and no-name signal cables

blacknoise filters and a dedicated power line

Thanks for the observations…and positive feedback.