Listening to Dynamic Music – HD-Audio Challenge II

The HD-Audio Challenge II has launched. Over 250 audio enthusiasts have already signed up, and more are signing up every day. Please feel free to encourage others to participate in this new research project by linking to this page. Anyone interested should submit their information using the form below:

Last week, Dropbox informed me that they had banned my account because I exceeded my daily download allotment. So I moved all of the files to Google Drive and have been sending the link to everyone who has signed up. If you haven’t received the link, please be patient. I’m adding about 25-30 people every day to avoid swamping the Google account. Once you have the files, please let me know.

Experiencing Real World Dynamic Range

I received the following from a reader after he played a few of the test files downloaded from the AIX Records catalog. He wrote, “Must admit I do struggle with levels though. Like a couple of recordings I have purchased from AIX in the past, they seem SO low. I appreciate that avoiding compression will reduce the average level but there is such a difference it does make me wonder. My amp has an accurate volume scale in dB and your tracks seem to be about 12-15 dB down in comparison. With relatively inefficient speakers (Quad electrostatics) I am running out of gain from my 200w amp.”

This comment is neither unexpected nor uncommon, so I thought I would briefly address the issue of live vs. reproduced dynamic range in this post. Recordings that accurately reflect the dynamics of the original performance will undoubtedly sound much quieter than most of the other albums you’ve experienced, because their RMS (Root Mean Square; think “average” and you get the gist) amplitude is much lower. Unfortunately, virtually ALL commercially released recordings have had their original dynamics modified, and not to the benefit of the original performance. This is standard operating procedure during the mastering phase of a production. Mastering engineers employ sophisticated analog and/or digital compressor/limiters to reduce the dynamic range of the source mixes. Amplitude levels exceeding a specific threshold are attenuated by a user-defined ratio, typically from 4:1 up to a crushing 20:1 (at 4:1, an input that rises 4 dB above the threshold comes out only 1 dB above it). The process removes all or most of the dynamic variation present in the original music and yields an “all loud all of the time” recording, which is decidedly unmusical and unnatural.
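To make the threshold and ratio concrete, here is a minimal Python sketch of a static, hard-knee compressor gain curve, plus the RMS calculation mentioned above. It is a simplification under stated assumptions: the function names and the -12 dBFS threshold are illustrative, and real mastering compressors add attack/release smoothing, soft knees, and look-ahead that are not modeled here.

```python
import math


def db_to_lin(db: float) -> float:
    """Convert a level in dB to a linear amplitude factor (full scale = 1.0)."""
    return 10 ** (db / 20)


def lin_to_db(x: float) -> float:
    """Convert a positive linear amplitude to dB relative to full scale."""
    return 20 * math.log10(x)


def compress_sample(x: float, threshold_db: float = -12.0, ratio: float = 4.0) -> float:
    """Hypothetical static, hard-knee compressor applied to one sample.

    Above the threshold, each `ratio` dB of input rise yields only 1 dB of
    output rise. Real compressors also smooth the gain with attack/release
    times; that is deliberately left out here.
    """
    level = abs(x)
    if level == 0.0:
        return 0.0
    level_db = lin_to_db(level)
    if level_db <= threshold_db:
        return x  # below threshold: passed through unchanged
    out_db = threshold_db + (level_db - threshold_db) / ratio
    return math.copysign(db_to_lin(out_db), x)


def rms(samples: list[float]) -> float:
    """Root mean square: square each sample, average, then take the root."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


# A full-scale peak sits 12 dB above a -12 dBFS threshold.
for ratio in (4.0, 20.0):
    out = compress_sample(1.0, threshold_db=-12.0, ratio=ratio)
    print(f"{ratio:>4}:1 -> peak now {lin_to_db(out):.1f} dBFS")  # -9.0, then -11.4

# For a sine wave, RMS is peak / sqrt(2), roughly 3 dB below the peak.
sine = [math.sin(2 * math.pi * k / 100) for k in range(100)]
print(f"sine RMS = {rms(sine):.4f}")  # 0.7071
```

At 20:1 the 12 dB overshoot shrinks to 0.6 dB, which is why heavily limited material looks like a flat plateau on a waveform display.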

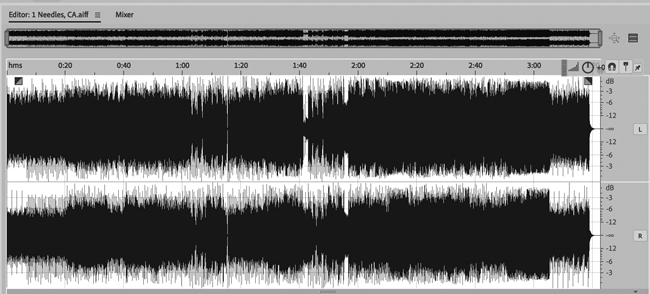

The diagram below was ripped from a CD release:

The dynamic range of the track above registers at 48 dB, which can be completely described by about 8 bits! Why is everyone promoting 24-bit files for music playback when the material we listen to uses only about a third of that potential?
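The 48 dB to 8 bits conversion rests on a rule of thumb: each bit of PCM word length adds 20·log10(2), about 6.02 dB, of theoretical dynamic range (the familiar full-scale sine SNR formula adds a further 1.76 dB). A quick sketch of that arithmetic, assuming an ideal quantizer with no dither or noise shaping:

```python
import math

DB_PER_BIT = 20 * math.log10(2)  # about 6.02 dB of range per bit


def bits_for_range(dynamic_range_db: float) -> float:
    """Word length (in bits) whose ideal range matches the given dB span."""
    return dynamic_range_db / DB_PER_BIT


def range_for_bits(bits: int) -> float:
    """Theoretical dynamic range in dB of an ideal `bits`-bit PCM word."""
    return bits * DB_PER_BIT


print(f"{bits_for_range(48):.1f} bits")  # 8.0 bits for the 48 dB CD track
print(f"{range_for_bits(16):.1f} dB")    # 96.3 dB for 16-bit
print(f"{range_for_bits(24):.1f} dB")    # 144.5 dB for 24-bit
```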

Understanding Compression and Normalization

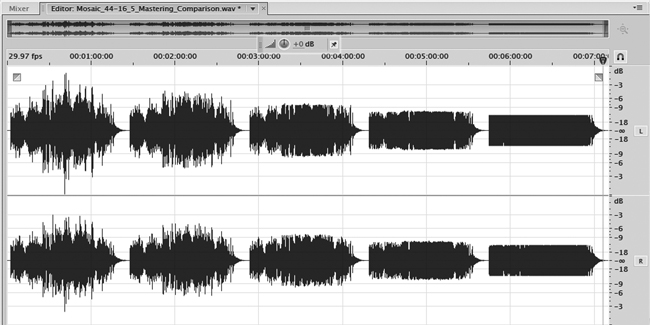

Take a look a the following diagram from the Music and Audio: A User Guide to Better Sound. It shows the progressive application of more and more compression applied to an unprocessed source recording (from my own catalog).

The natural dynamics of this acoustic guitar, bass, and percussion recording are shown on the left. The 24-bit PCM word length gives recording engineers greater flexibility in capturing music with wide dynamics. Each of the other four versions shows an increasing amount of compression applied to the original file. The one at the right-hand edge is typical of commercial recordings. Note that its overall level has been severely reduced: segments of the original track with amplitudes above a specific threshold (typically -12 to -14 dB) are attenuated. But the mastering engineer is not finished mucking with the dynamics; it gets worse.

The level of the plateau on the heavily compressed version is about 12 dB below the potential maximum of this file as defined by the word length. Notice the single moment near the beginning of the left channel (the top one) in the original, uncompressed track that reaches the absolute peak of this file. That is the loudest moment in the track. If it is left in the recording (as audiophiles would and should prefer), the full range of dynamics is captured and can be reproduced. The four mastered versions progressively remove these musical variations in amplitude, performance subtleties painstakingly practiced by skilled musicians and deliberately included in their performances. The compressed version plays great on the radio but falls well short for those seeking real-world, natural musical dynamics.

The final step a mastering engineer performs after compressing a track is to maximize its amplitude within the 16- or 24-bit file container. This process is called normalization, and it does not restore the previously removed dynamic range. It only makes the flattened file louder: a lot louder. In the example above it increases the amplitude by about 12 dB!

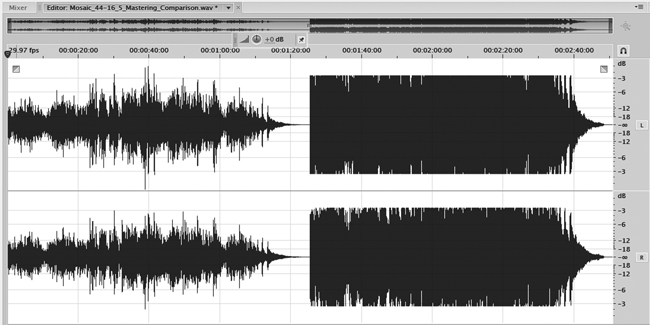

The illustration below shows the original uncompressed track and again after the compression AND normalization processes have been applied.

Guess which one will sound “punchier”, louder, and have more commercial appeal for those listening on radio or streaming services?

Normalization is a two-step process. In the first pass, the normalization algorithm scans the file looking for the single digital word with the highest value: the loudest amplitude. The second pass then amplifies all of the digital words by the difference between that highest scanned value and the maximum value allowed in the 24-bit (or 16-bit) container.
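The two passes can be sketched in a few lines of Python. This is an illustration of plain peak normalization on integer PCM samples, not any particular mastering tool's implementation; the function name and the sample values are hypothetical.

```python
import math


def normalize(samples: list[int], bits: int = 24) -> list[int]:
    """Peak-normalize integer PCM samples to the full scale of a `bits`-bit word.

    Pass 1 finds the largest magnitude; pass 2 scales every sample by the
    same gain. A gap of G dB between the peak and full scale corresponds to
    a linear gain factor of 10**(G/20).
    """
    full_scale = 2 ** (bits - 1) - 1                  # 8,388,607 for 24-bit
    peak = max((abs(s) for s in samples), default=0)  # pass 1
    if peak == 0:
        return list(samples)                          # silence: nothing to scale
    gain = full_scale / peak
    return [round(s * gain) for s in samples]         # pass 2


# Hypothetical 24-bit excerpt whose loudest sample sits about 12 dB below full scale.
track = [0, 1_000_000, -2_100_000, 500_000]
louder = normalize(track)
gain_db = 20 * math.log10((2 ** 23 - 1) / 2_100_000)
print(louder)
print(f"gain applied: {gain_db:.1f} dB")  # 12.0 dB
```

Every sample gets the same gain, so the relative levels inside the track are untouched; only the absolute level moves. A production tool would normally apply dither when requantizing after the gain change, which is omitted here.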

If a mastering engineer normalizes the original, very dynamic tracks, the dynamic contour is maintained. The single loudest moment will light up the 24th bit in the PCM word, and the dynamics will remain wide and natural. However, if normalization is applied to a track that has been heavily compressed, ALL of the digital words approach the maximum value of the file. This is great for making your track pop out of a radio speaker but undesirable for enjoying a musical performance.
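The contrast in this paragraph can be simulated with a self-contained sketch: build a hypothetical test track that is quiet (-20 dBFS) except for one brief full-scale swell, then compare peak normalization alone against 20:1 compression followed by peak normalization. Crest factor (peak-to-RMS ratio) serves as a rough stand-in for dynamic range; the signal, threshold, and ratio are illustrative assumptions, and the compressor has no attack/release smoothing.

```python
import math

PERIOD = 48  # samples per cycle of the hypothetical test tone


def make_dynamic_track(n: int = 4800) -> list[float]:
    """Quiet tone at -20 dBFS with one short full-scale swell (8 cycles)."""
    return [(1.0 if 2016 <= i < 2400 else 0.1) * math.sin(2 * math.pi * i / PERIOD)
            for i in range(n)]


def compress(x: list[float], threshold_db: float = -20.0, ratio: float = 20.0) -> list[float]:
    """Static hard-knee compressor (no attack/release), full scale = 1.0."""
    out = []
    for s in x:
        if s != 0.0:
            level_db = 20 * math.log10(abs(s))
            if level_db > threshold_db:
                out_db = threshold_db + (level_db - threshold_db) / ratio
                s = math.copysign(10 ** (out_db / 20), s)
        out.append(s)
    return out


def peak_normalize(x: list[float]) -> list[float]:
    """Two-pass peak normalization: find the peak, then scale it to 1.0."""
    peak = max(abs(s) for s in x)
    return [s / peak for s in x]


def rms_db(x: list[float]) -> float:
    return 10 * math.log10(sum(s * s for s in x) / len(x))


def crest_db(x: list[float]) -> float:
    """Peak-to-RMS ratio in dB: a simple proxy for how dynamic a signal is."""
    return 20 * math.log10(max(abs(s) for s in x)) - rms_db(x)


original = peak_normalize(make_dynamic_track())
mastered = peak_normalize(compress(make_dynamic_track()))

for name, sig in (("original", original), ("compressed+normalized", mastered)):
    quiet_peak = 20 * math.log10(max(abs(s) for s in sig[:2000]))
    print(f"{name:>22}: RMS {rms_db(sig):6.1f} dBFS, crest {crest_db(sig):5.1f} dB, "
          f"quiet passage peak {quiet_peak:6.1f} dBFS")
```

Both versions peak at full scale, but in the compressed-and-normalized one the quiet passage climbs from about 20 dB below full scale to roughly 1 dB below it, the RMS level rises sharply, and the crest factor collapses.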

The files that are part of the HD-Audio Challenge II have not been compressed or normalized. All of the selections come from the AIX Records catalog and maintain the original dynamics as performed by the musicians and singers during the sessions. I believe this is what audiophiles want when they play back an album or track. Sadly, this is not what most record labels offer their customers. We experience compressed and normalized files because that’s what generates the most profit for the labels.

So when you listen to the tracks for the HD-Audio Challenge II, you will undoubtedly have to turn up the volume on your preamplifier when listening to them. If you have a good preamp (with lots of dynamic range), a capable amplifier, and a quiet listening room, you will experience real world dynamics — perhaps for the first time! Be sure you turn down the volume on your preamp before playing a commercial album or mastered source or you may get blown out.

There’s a lot more to say about recording dynamics, noise floor, “micro dynamics,” “low-level details,” amplifiers, and speakers, but I’ll leave that discussion for the book or another article. However, the explanation above may influence your listening during the HD-Audio Challenge, or at least make you realize that most of your listening can be contained in far fewer bits. A heavily compressed and normalized track typically requires between 8 and 12 bits. That should make you wonder about the dubious claims by download services, streaming companies, and equipment vendors that want you to believe that more bits mean better listening. Moving to 24-bit, let alone 32-bit, files is completely unnecessary for normal music listening.

Making Friends at the Audio Shows and on the Plane

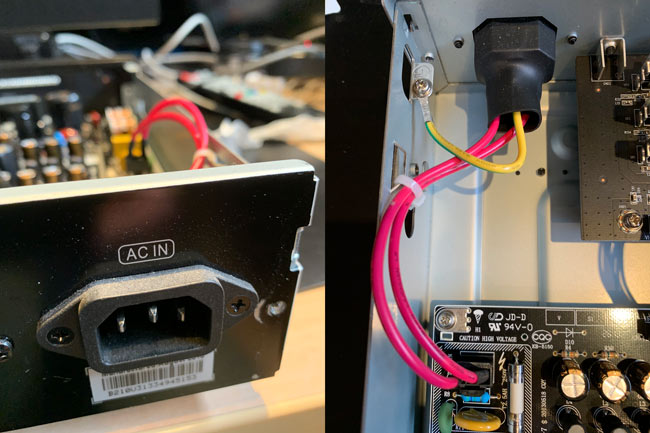

I wanted to relate a few brief stories collected while I was on the east coast at the CAF and NY Audio Show. The first was a brief — very brief — conversation I had with a younger gentleman that was probably an employee of a cable company or other accessories vendor. I saw him at both shows. He engaged me when he overheard me dismissing the ridiculous claims made by cable companies about power cords. You may remember the picture below of the inside of one of my Oppo Blu-ray players. It shows the internal wiring of my Oppo Blu-ray player — simple 12 gauge stranded wire, which plugs into the power supply circuit board with its thin copper traces. There is no way that an expensive, boa constrictor sized power cord will cause any changes or enhancements to the fidelity of this player. If it did there is something wrong with the entire setup.

This gentleman insisted that power cords make “a 100% improvement” in the fidelity of a high-end audio system. He was flummoxed and upset at my assertion. So he asked, “I guess you also believe that a desktop computer or laptop playing music files sounds the same as a dedicated music server?” When I said that yes, I did believe that, he stormed off without a word. I’ve tested his assertion and confirmed that the same digital bits were delivered to my Benchmark DAC from a server and from my MacBook Pro. And if the same bits are sent to a state-of-the-art DAC, which uses its own clock to convert the digital information back to an analog signal, then the same sound is produced.
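The post doesn't say how the bit comparison was made. One common way to run this kind of check, assuming you can capture the PCM stream each source delivers into raw files (for example through a digital capture interface), is to compare cryptographic hashes of the captures: identical hashes mean identical bits. The function names and file names below are hypothetical, and real captures would first need to be trimmed to the same first and last sample.

```python
import hashlib
from pathlib import Path


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so long captures don't have to fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def bit_identical(path_a: str, path_b: str) -> bool:
    """True if both captures contain exactly the same bytes."""
    return (Path(path_a).stat().st_size == Path(path_b).stat().st_size
            and sha256_of(path_a) == sha256_of(path_b))


# Hypothetical capture files, already aligned to the same start and end:
# print(bit_identical("server_capture.raw", "laptop_capture.raw"))
```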

The second conversation of note was something I overheard at the nearby display of historic phono cartridges and tone arms. An obviously strong advocate of vinyl LPs offered his opinion, “Digital audio will never equal the analog richness of good vinyl. CDs sounded harsh 40 years ago and they still sound harsh today.”

Finally, I should mention a conversation I had with a gentleman sitting next to me on the flight to DC. He was returning to his government job from an extended stay in Australia. We got to talking about audio, and it turned out that he does AI algorithm development for a research department. He went to grad school at MIT and appeared to be a very knowledgeable and intelligent individual. But when I started talking about my progression from analog to digital audio production, he interjected, “but all of those individual digital audio samples can never result in a smooth analog waveform, right?” I proceeded to share some basic information about sampling theory, the Shannon-Nyquist theorem, and reconstruction filters, and ultimately he was convinced. But he still wants to keep listening to his vast vinyl LP collection. And so he should.

Holiday Savings on AIX Records & Music and Audio

Discounts have been extended until the end of November for all AIX Records releases and for Music and Audio: A User Guide to Better Sound. Use COUPON CODE AIX191101 for 25% off all AIX Records releases and MAAG191016 for 50% off the paperback version of the book. The holidays are fast approaching… shop now for the perfect audiophile gift.

Following the success at the audio trade shows in DC and NYC, quantities of the book are limited. Make sure you get your copy in time for the holidays.

Thanks as always and Happy Holidays!

The reader comment was “I do struggle with levels though… they seem SO low.”

I had kind of the opposite reaction and was actually surprised at how little volume the AIX tracks need, for uncompressed recordings that is. Sure, they require a higher volume setting than most music but I have numerous hi-res high-dynamic-range albums from the likes of Reference Recordings that require me to “turn it up” even more to get acceptable listening levels. These are full orchestra recordings and have different dynamic range requirements than the AIX releases I am comparing them to.

Oh, and the AIX recordings sound wonderful.

Interesting, thanks. Keith Johnson is a friend and a terrific engineer. His work also preserves real-world dynamics. It’s curious that his recordings need more amplification, however. I know he does use distant microphones.

Hi Mark, I found your article very interesting, but I have a question about the story you told regarding the sampling theorem. Monty Montgomery explains and clearly demonstrates the concept in some of his videos and on his blog (https://people.xiph.org/~xiphmont/demo/neil-young.html): with 16/44.1 you can reconstruct the original signal precisely. But Rob Watts of Chord Electronics explains that hi-res helps reconstruct the original signal better, because the neural timing discrimination of humans is about 4 microseconds, while the time between samples at a 44.1 kHz sampling frequency is about 22.7 microseconds. He talks about transients: even though the signal can be reconstructed as a continuous analog signal, there is an error in timing and pitch, so a transient can land before or after its precise moment. He explains this without any proof, just with graphics. I include the link to the video here, and it would be interesting to hear your opinion about this technique and about the temporal discrimination of human neural networks. https://www.youtube.com/watch?v=cgoOz6OP4_I&t=414s
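The timing question raised in this comment can be checked numerically. At 44.1 kHz the sample period is 1/44,100 s, about 22.7 µs. The sketch below (a simplified setup chosen for illustration, not Monty's or Rob Watts' method) samples a 1 kHz tone, delays it by just 1 µs, quantizes it to 16 bits, and recovers the delay from the phase of the sampled data. The tone frequency, delay, and amplitude are arbitrary assumptions.

```python
import math

FS = 44_100   # sample rate (Hz)
F = 1_000     # test tone (Hz); one second of it is exactly 1000 cycles
DELAY = 1e-6  # 1 microsecond, far shorter than one sample period


def sampled_tone(delay_s: float, n: int = FS, amplitude: float = 0.5) -> list[float]:
    """Sample a delayed sine and quantize it to 16-bit steps (no dither)."""
    lsb = 1 / 32768
    return [round(amplitude * math.sin(2 * math.pi * F * (k / FS - delay_s)) / lsb) * lsb
            for k in range(n)]


def estimate_delay(x: list[float]) -> float:
    """Recover the tone's delay from its phase (a single-bin DFT at F)."""
    w = 2 * math.pi * F / FS
    i = sum(s * math.sin(w * k) for k, s in enumerate(x))
    q = sum(s * math.cos(w * k) for k, s in enumerate(x))
    phase = math.atan2(-q, i)  # equals 2*pi*F*delay for a delayed sine
    return phase / (2 * math.pi * F)


print(f"sample period  : {1e6 / FS:.1f} µs")
print(f"estimated delay: {estimate_delay(sampled_tone(DELAY)) * 1e6:.3f} µs")
```

The recovered delay lands within a few nanoseconds of 1 µs, so timing information far finer than one sample period survives 16/44.1 sampling. This does not by itself settle what the ear can resolve; it only shows that timing is not quantized to the sample grid.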

Monty’s Digital Show & Tell video explains this pretty well: around 21 minutes into the video, timing precision vs. sample rate is discussed and demonstrated. Another concept that many don’t get is dither-enabled reconstruction of sub-bit precision; see a quarter-bit signal well above the noise floor around the 14-minute mark.
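The quarter-bit demonstration can be reproduced in miniature. The following is a simplified sketch, not the code used in the video: a 1 kHz tone with an amplitude of 0.25 LSB is quantized once without dither and once with TPDF (triangular) dither spanning ±1 LSB, and the tone's amplitude is then measured by correlating with a reference sine. The 48 kHz rate, the random seed, and the function names are arbitrary choices.

```python
import math
import random

N = 48_000        # one second at 48 kHz
CYCLES = 1_000    # 1 kHz tone: exactly 1000 cycles in N samples
AMPLITUDE = 0.25  # a quarter of one LSB


def quantize(x: float) -> int:
    """Round to the nearest integer step (1 LSB = 1.0 here)."""
    return math.floor(x + 0.5)


def tone_level(samples: list[int]) -> float:
    """Amplitude of the 1 kHz component, found by correlating with a sine."""
    return 2 / N * sum(s * math.sin(2 * math.pi * CYCLES * k / N)
                       for k, s in enumerate(samples))


def quarter_bit_tone(dither: bool, seed: int = 1) -> list[int]:
    """Quantize the 0.25 LSB tone, optionally adding TPDF dither first."""
    rng = random.Random(seed)
    out = []
    for k in range(N):
        x = AMPLITUDE * math.sin(2 * math.pi * CYCLES * k / N)
        if dither:
            x += rng.random() - rng.random()  # triangular PDF on (-1, 1) LSB
        out.append(quantize(x))
    return out


print(f"no dither  : {tone_level(quarter_bit_tone(False)):.3f} LSB")  # tone lost entirely
print(f"TPDF dither: {tone_level(quarter_bit_tone(True)):.3f} LSB")   # close to 0.25
```

Without dither every sample rounds to zero and the tone disappears. With dither each sample is only -1, 0, or +1 LSB, yet the tone's amplitude is still recovered, because the dither makes the quantization error behave like noise that is uncorrelated with the signal.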

Seeing digital audio as a crude stair-stepped approximation that follows the bit-depth × sample-rate grid is fundamentally flawed. The reconstructed (band-limited) signal is perfectly smooth, with all of the original detail between the “grid lines”.
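To illustrate the point about values between the “grid lines”, here is a sketch of ideal band-limited (Whittaker-Shannon, or sinc) reconstruction. A real DAC approximates this with a finite reconstruction filter; the 4001-sample truncation and the 1 kHz test tone here are arbitrary assumptions that leave a small residual error.

```python
import math

FS = 44_100  # sample rate (Hz)
F = 1_000    # test tone (Hz), well below FS / 2


def sinc(x: float) -> float:
    """Normalized sinc: sin(pi x) / (pi x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


def reconstruct(samples: dict[int, float], t: float) -> float:
    """Whittaker-Shannon interpolation at fractional sample index t."""
    return sum(v * sinc(t - n) for n, v in samples.items())


# Samples of the tone from n = -2000 to 2000 (a truncated version of the infinite sum)
samples = {n: math.sin(2 * math.pi * F * n / FS) for n in range(-2000, 2001)}

for t in (0.25, 0.5, 0.75):  # points between samples 0 and 1
    exact = math.sin(2 * math.pi * F * t / FS)
    print(f"t={t}: reconstructed {reconstruct(samples, t):.5f}, exact {exact:.5f}")
```

The reconstructed values between samples track the original continuous sine; there is no staircase anywhere in the math.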

This is not to say that processing audio with a brickwall limiter will not reduce its loudness range.

All PCM digital recordings require filtering to remove ultrasonic frequencies generated during the DAC process. Are you saying that the use of these filters above 20 kHz impacts the loudness of the in-band signal?