Meridian’s MQA: Swapping Rectangles for Triangles

You can call it what you want, but the new technology that Meridian announced last week is a data reduction technique that requires both encoding and decoding. In Robert Stuart’s AES paper, he uses the terms “lossy” and “lossless”…so you decide whether this new methodology is a codec or not.

But it certainly is a technique for maintaining the benefits of high sample rate audio within a much smaller container. The container can be a traditional PCM or FLAC file infused with metadata that allows the smaller file to be expanded back to the original 192 kHz source file…the decoding side of the process.

Just how this works, and the magnitude of the improvement in bandwidth and storage capacity, is described in the AES paper “A Hierarchical Approach to Archiving and Distribution” by Robert Stuart and Peter Craven. The bottom line for the distribution system is that a stereo 192 kHz/24-bit original requiring 9.2 Mbps can be “encapsulated” at an average data rate of 922 kbps, which is well below the 1.41 Mbps of a stereo 44.1 kHz/16-bit compact disc!
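The arithmetic behind these figures is easy to check. This minimal Python sketch computes raw (uncompressed) PCM bitrates as sample rate × bit depth × channels. The helper name `pcm_bitrate_bps` is purely illustrative, and the 922 kbps average is simply the figure quoted from the paper, not something the code derives.

```python
def pcm_bitrate_bps(sample_rate_hz: int, bit_depth: int, channels: int = 2) -> int:
    """Raw (uncompressed) PCM bitrate in bits per second."""
    return sample_rate_hz * bit_depth * channels

hires = pcm_bitrate_bps(192_000, 24)   # stereo 192/24: about 9.2 Mbps
cd = pcm_bitrate_bps(44_100, 16)       # stereo CD: about 1.41 Mbps
mqa_avg = 922_000                      # average rate quoted in the paper (not computed here)

print(f"192/24 stereo: {hires / 1e6:.2f} Mbps")
print(f"CD 44.1/16 stereo: {cd / 1e6:.3f} Mbps")
print(f"Reduction vs. 192/24: {hires / mqa_avg:.1f}x")
print(f"Fraction of the CD rate: {mqa_avg / cd:.0%}")
```

These are raw PCM rates; a lossless FLAC file of the same material is usually smaller, so this is a comparison of uncompressed streams.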

Getting high-resolution audio fidelity down to bitrates associated with CDs is quite a feat. Based on the presentation Robert gave at the AES convention in Los Angeles, a careful reading of the paper mentioned above, and the discussions and videos available on the Meridian website, I think I have a pretty good handle on MQA.

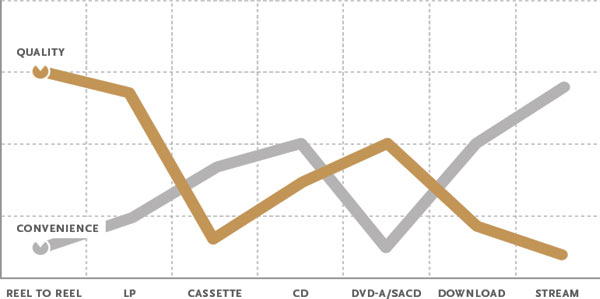

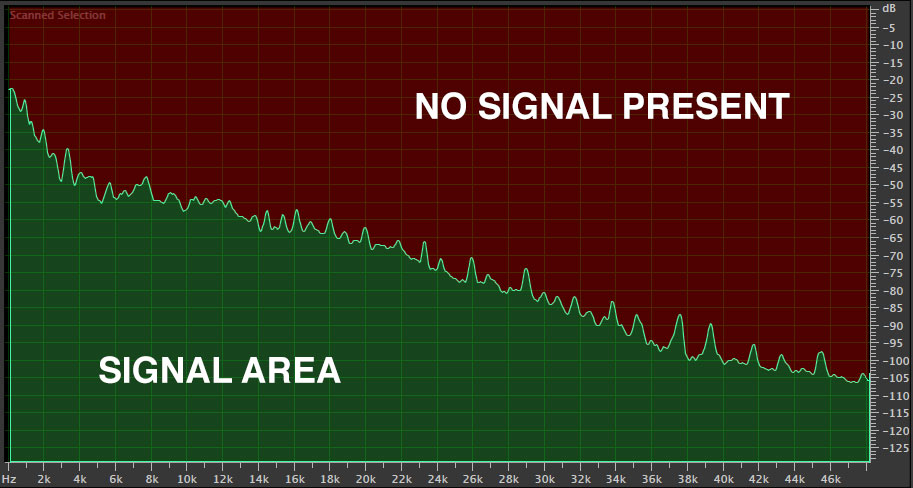

It’s all about switching from rectangles to triangles. I’ve included lots of graphics in previous posts that show the intensity of signals diminishing as frequency increases (except in DSD recordings). Take a look at the diagram below:

Figure 1 – This is a typical plot of intensity vs. frequency for a 96 kHz/24-bit PCM recording.

I plotted one of my tracks to show that amplitude decreases as frequency increases. However, our current digital encoding schemes give every sample the same number of bits and spread that capacity evenly across the entire frequency range, from the lowest octave to the highest, regardless of whether there is information there or not. Many customers of high-resolution digital music sites are spending their money on a bunch of digital zeros! The only plots I’ve ever seen with rising amounts of ultrasonic energy were derived from DSD recordings and even DXD tracks! And of course, that ultrasonic content is noise: the 1-bit DSD system uses noise shaping to push its quantization noise out of the audio band and into the ultrasonic range.
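One way to see the “rectangle” is to ask how much of a PCM stream’s bandwidth lies above the roughly 20 kHz limit of hearing. Uniform PCM (without noise shaping) gives every hertz from 0 Hz up to Nyquist (half the sample rate) the same resolution, so the share of capacity spent above 20 kHz grows with the sample rate. This is a minimal Python sketch; `band_share` is a hypothetical helper, and the 20 kHz cutoff is an assumption for illustration.

```python
AUDIBLE_LIMIT_HZ = 20_000  # assumed upper limit of hearing, for illustration only

def band_share(lo_hz: float, hi_hz: float, sample_rate_hz: float) -> float:
    """Fraction of a uniform PCM stream's 0..Nyquist bandwidth lying between lo_hz and hi_hz."""
    nyquist = sample_rate_hz / 2
    width = max(0.0, min(hi_hz, nyquist) - lo_hz)  # clamp to the band that actually exists
    return width / nyquist

for rate in (44_100, 96_000, 192_000, 384_000):
    share = band_share(AUDIBLE_LIMIT_HZ, rate / 2, rate)
    print(f"{rate / 1000:g} kHz: {share:.0%} of the band lies above 20 kHz")
```

At 192 kHz the top octave alone (48 to 96 kHz) accounts for half of the band, the region where music typically carries the least energy.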

Meridian looked at a lot of these amplitude vs. frequency plots and asked, “Why don’t we try to encode just the signal part of the music and ignore the empty space?” Instead of a rectangular frequency vs. amplitude box generating a large amount of data…much of which is empty…they came up with a system that focuses on just the audio. Of course, there’s a lot more to it than using a filter to remove the blank part of the plot, but this is a key component of the MQA technology. They call this “structured encoding” and “encapsulation filtering”.
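To put a rough number on the rectangle-versus-triangle idea, here is a toy model in Python. It is not Meridian’s structured encoding or encapsulation filtering. It only assumes that each bit of resolution buys about 6.02 dB of dynamic range, and that a frequency bin needs just enough bits to resolve the signal level in that bin above the noise floor. The spectral envelope (0 dBFS at DC falling linearly in dB to the 24-bit floor at Nyquist) is a hypothetical shape, and the function names are illustrative.

```python
DB_PER_BIT = 6.02  # each bit of resolution adds roughly 6.02 dB of dynamic range

def bits_needed(level_db: float, floor_db: float) -> float:
    """Bits required to resolve a signal at level_db above a noise floor at floor_db."""
    return max(0.0, (level_db - floor_db) / DB_PER_BIT)

def triangle_vs_rectangle(envelope_db, floor_db: float, bit_depth: int) -> float:
    """Ratio of bits needed under a spectral envelope to a uniform 'rectangle'.

    envelope_db: peak signal level (dBFS) in each equal-width frequency bin.
    """
    rectangle = bit_depth * len(envelope_db)  # every bin gets the full bit depth
    triangle = sum(min(bit_depth, bits_needed(level, floor_db)) for level in envelope_db)
    return triangle / rectangle

# Hypothetical envelope: 0 dBFS at DC, falling linearly (in dB) to the 24-bit floor at Nyquist.
BIT_DEPTH = 24
FLOOR_DB = -DB_PER_BIT * BIT_DEPTH  # about -144.5 dBFS
N_BINS = 1000
envelope = [FLOOR_DB * k / (N_BINS - 1) for k in range(N_BINS)]

ratio = triangle_vs_rectangle(envelope, FLOOR_DB, BIT_DEPTH)
print(f"fraction of the rectangle actually used: {ratio:.3f}")
```

With that straight-line envelope the “triangle” needs half the bits of the “rectangle”, a 2X saving. Real spectra are lumpier, and the larger reduction quoted in the paper comes from the rest of the MQA process, which this sketch does not attempt to model.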

In the final paragraphs of the AES paper, they “recommend that modern digital recordings should employ a wideband encoding system which places specific emphasis on time and frequency and sampling at no less than 384 kHz.”

More coming…

+++++++++++++++++++

I’m still looking to raise the $3700 needed to fund a booth at the 2015 International CES. I’ve received some very generous contributions but still need to raise additional funds. Please consider contributing any amount. I write these posts every day in the hopes that readers will benefit from my network, knowledge and experience. I hope you consider them worth a few dollars. You can get additional information at my post of December 2, 2014. Thanks.

I am not sure I understand the technique, but from a conceptual point of view: if the studio people (sound engineers, producers and mastering engineers) agree to encode future recordings for the clientele that owns an MQA decoder (which I suppose is software, perhaps with a special chip), this may convince someone to encode music dynamics as well. A listener equipped with this different decoder could then restore pre-programmed levels of audio dynamics, better suited to audiophiles or music lovers. Would this be a logical next step?

I believe MQA technology will focus on the vast catalogs of analog tape masters that are slowly making their way into high-resolution formats. I doubt that new productions will use MQA as a studio tool.

Maybe so

‘Analog tape masters….into high resolution formats’.

I don’t quite get that.

First they blow up the analog quality to 24/192, and then they use a new technology (MQA) to shrink these big files in order to make smaller files for streaming.

Why not just stick to CD-quality (16/44.1) lossless downloads and streaming for these ‘old productions’?

Their concern should rather be how to avoid mastering disasters – or how to make ‘new productions’ in high resolution quality.

Very good points! According to my own research and the advice of people whose opinions I respect…96 kHz/24-bit is completely sufficient to capture all of the fidelity from analog tape and new recordings.

Interesting. Data storage has become far less of a problem for me lately when it comes to storing HD Audio, so decreasing the file size is a non-issue for me. However, it would be fantastic to be able to stream my HD Audio collection over the internet, making my collection more accessible. Very cool 🙂

It’s the streaming part of this that might be very interesting.

Your analogy is lost on me. Quantization of a signal is a time-domain process, not a frequency-domain one. An A/D converter does not have high-frequency “bins” that are left empty. Taking the triangle analogy to the limit, you’ve got about half your space left, i.e., 2X.

Anyone that claims lossless compression below the Shannon entropy limit is essentially claiming perpetual motion.
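The entropy limit can be illustrated with a short Python sketch that measures the empirical (zeroth-order) Shannon entropy of a sequence of samples, in bits per sample. The function names are hypothetical. The second half shows why the model matters: a ramp looks incompressible sample by sample, but its first differences are constant. Coders that exploit correlation between samples (FLAC’s linear prediction is one example) can beat the zeroth-order figure, but no lossless coder can beat the true entropy rate of the source on average.

```python
import math
from collections import Counter

def entropy_bits(samples) -> float:
    """Empirical zeroth-order Shannon entropy, in bits per sample."""
    counts = Counter(samples)
    n = len(samples)
    # sum of p * log2(1/p) over the observed symbol frequencies
    return sum((c / n) * math.log2(n / c) for c in counts.values())

def first_difference(samples):
    """Residual of the simplest predictor: 'next sample equals the previous one'."""
    return [b - a for a, b in zip(samples, samples[1:])]

four_levels = [0, 1, 2, 3] * 250  # only four values, equally likely
ramp = list(range(1000))          # every value occurs exactly once

print(entropy_bits(four_levels))             # 2 bits per sample
print(entropy_bits(ramp))                    # about 9.97 bits per sample
print(entropy_bits(first_difference(ramp)))  # 0: the residual is constant
```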

Quantization error is an amplitude error. The fact that there is a lot less energy applied to very high frequencies provides an opportunity to reduce the amount of data required to properly encode it. I’m reporting on the technical paper that Robert Stuart presented at the AES conference last fall.
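The point that quantization error is an amplitude error can be checked numerically. This Python sketch rounds a full-scale sine wave to N-bit levels, measures the power of the rounding error, and compares the resulting signal-to-noise ratio with the textbook figure of roughly 6.02N + 1.76 dB. The 997-cycle test tone and the helper names are illustrative choices, not anything taken from the paper.

```python
import math

def quantize(x: float, bits: int) -> float:
    """Round x in [-1, 1] to the nearest level of a signed N-bit quantizer."""
    full_scale = 2 ** (bits - 1) - 1
    return round(x * full_scale) / full_scale

def quantization_snr_db(bits: int, n: int = 48_000, cycles: int = 997) -> float:
    """Measured SNR of a full-scale sine (cycles periods over n samples) after N-bit quantization."""
    signal_power = 0.0
    error_power = 0.0
    for i in range(n):
        x = math.sin(2 * math.pi * cycles * i / n)
        e = quantize(x, bits) - x  # the error is purely a deviation in amplitude
        signal_power += x * x
        error_power += e * e
    return 10 * math.log10(signal_power / error_power)

for bits in (8, 16, 24):
    measured = quantization_snr_db(bits)
    print(f"{bits}-bit: measured {measured:.1f} dB, theory {6.02 * bits + 1.76:.1f} dB")
```

Each extra bit halves the step size and buys about 6 dB, which is why a band whose content sits far below full scale, or below the noise floor entirely, can in principle be described with fewer bits.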