There’s Music Above 20 kHz!

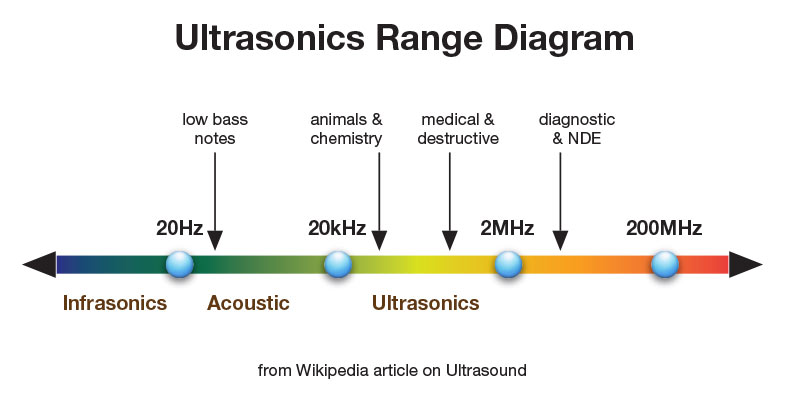

I use the term ultrasonic to describe the area of the audio spectrum that lies above 20 kHz. As a reader pointed out in a recent comment, perhaps “ultrasonic” is an oxymoron. If ultra means “going beyond” and sonic means “relating to or using sound waves”, then maybe it is. A quick check of Wikipedia provides the following description and figure:

“Ultrasound is an oscillating sound pressure wave with a frequency greater than the upper limit of the human hearing range. Ultrasound is thus not separated from ‘normal’ (audible) sound based on differences in physical properties, only the fact that humans cannot hear it. Although this limit varies from person to person, it is approximately 20 kilohertz (20,000 hertz) in healthy, young adults. Ultrasound devices operate with frequencies from 20 kHz up to several gigahertz.”

Figure 1 – Illustration of frequency spectrum from infrasound to ultrasound with acoustics in the middle from Wikipedia

Of course, this whole issue hinges on whether there is a line of demarcation between acoustic energy and ultrasound. Obviously, there isn’t. The generally accepted upper limit of human hearing is conveniently stated as 20 kHz in healthy young adults. I have the sense that this is “convenient” because it’s easy to remember when stated as “20 to 20 kHz”. The actual upper limit likely varies considerably from person to person.

Before arguing about whether it’s time to scrap the old definition and revise the “audio band” of human hearing upwards, let’s look at the frequencies produced by the instrumental groups found in a traditional symphony orchestra. I was referred to a research project conducted at Caltech about 15 years ago. The resulting paper is titled:

There’s Life Above 20 Kilohertz!

A Survey of Musical Instrument Spectra to 102.4 KHz

James Boyk

California Institute of Technology

You can check out the paper online.

Here’s the abstract:

“At least one member of each instrument family (strings, woodwinds, brass and percussion) produces energy to 40 kHz or above, and the spectra of some instruments reach this work’s measurement limit of 102.4 kHz. Harmonics of muted trumpet extend to 80 kHz; violin and oboe, to above 40 kHz; and a cymbal crash was still strong at 100 kHz. In these particular examples, the proportion of energy above 20 kHz is, for the muted trumpet, 2 percent; violin, 0.04 percent; oboe, 0.01 percent; and cymbals, 40 percent. Instruments surveyed are trumpet with Harmon (“wah-wah”) and straight mutes; French horn muted, unmuted and bell up; violin sul ponticello and double-stopped; oboe; claves; triangle; a drum rimshot; crash cymbals; piano; jangling keys; and sibilant speech. A discussion of the significance of these results describes others’ work on perception of air- and bone-conducted ultrasound; and points out that even if ultrasound be [is] taken as having no effect on perception of live sound, yet its presence may still pose a problem to the audio equipment designer and recording engineer.”

I applaud the author for establishing that musical partials extend well above the accepted upper limit of human hearing. It’s clear that we’re exposed to a lot of ultrasonic energy during a live performance by a symphony orchestra. Of course, the distances involved in this test are nothing like those in Carnegie Hall, but the paper confirms something that I’ve known for a long time. Music doesn’t stop at 20 kHz…so why should our recording and reproduction systems?
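The percentages in the abstract are ratios of spectral energy above 20 kHz to total energy. Here is a minimal Python sketch of that kind of calculation, under assumptions that are mine rather than the paper’s: a plain DFT over one short block, a sample rate of 204.8 kHz (chosen so the Nyquist frequency matches the paper’s 102.4 kHz measurement limit), and a synthetic two-tone signal instead of real instrument recordings. The function name `energy_fraction_above` is hypothetical.

```python
import cmath
import math

def energy_fraction_above(samples, sample_rate, cutoff_hz):
    """Fraction of a block's spectral energy that lies above cutoff_hz.

    Uses a plain O(N^2) DFT: slow, but fine for short illustrative blocks.
    """
    n = len(samples)
    total = 0.0
    above = 0.0
    for k in range(n // 2 + 1):  # for real input, bins above n/2 mirror these
        coeff = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        # Bins other than DC and Nyquist stand for a mirrored pair, so count them twice.
        weight = 1.0 if k == 0 or 2 * k == n else 2.0
        power = weight * abs(coeff) ** 2
        total += power
        if k * sample_rate / n > cutoff_hz:
            above += power
    return above / total if total > 0 else 0.0

# Hypothetical test signal: a 1 kHz tone plus a 40 kHz partial at one tenth the amplitude.
RATE = 204_800  # assumed: Nyquist frequency equals the paper's 102.4 kHz limit
N = 1024        # 200 Hz bins, so both tones fall exactly on a bin
signal = [math.sin(2 * math.pi * 1_000 * t / RATE)
          + 0.1 * math.sin(2 * math.pi * 40_000 * t / RATE) for t in range(N)]
print(energy_fraction_above(signal, RATE, 20_000))  # about 0.0099, i.e. 0.01 / 1.01
```

Because energy scales with amplitude squared, a partial at one tenth the amplitude carries only about 1% of the energy. By the same arithmetic, the 0.04% figure for violin corresponds to ultrasonic content at roughly 2% of the amplitude of the audible content.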

I still have difficulty with your fundamental premise that the role of ‘proper’ Hi-Fi is to reproduce all the sounds that may have been present in the concert hall, including those we can’t hear. According to the figures in the paper you quote from, ultrasound accounts for a maximum of 2% of the overall sonic energy expended by one instrument. This is hardly significant. You are probably aware of the traditional arguments against including ultrasound, mainly to avoid interference with the audible part of the spectrum, leading to audible artefacts and distortion.

Presumably for completeness you would also want to record and include infrasonic sound – arising from such things as the lowest organ pedals, a pneumatic drill, traffic noise, a distant thunderstorm, etc. Infrasound is known to travel long distances, and I have a feeling that it would account for more, perhaps much more, of the overall sonic energy in the recording room than ultrasound. Reproducing infrasound accurately would however present some formidable engineering challenges in a domestic listening environment.

I am beginning to detect the whiff of snake oil on this whole ‘extended audio range’ subject.

I can’t see any problem with capturing and reproducing all of the musical information in a performance space. Obviously, this doesn’t include subways going by or a distant thunderstorm. Those things aren’t part of the composer’s intent, whereas the output of a crash cymbal is, and according to the paper 40% of a cymbal crash’s energy is ultrasonic. And we really don’t know whether the smaller percentages or the 2% are significant…time will tell, but there are indications that these frequencies do impact our brains.

I choose not to ignore a portion of the spectrum that I consider important. For me, this is a component of true fidelity. It’s not that music can’t sound amazing without these frequencies, but since the instruments make them, I want to deliver them. It would be snake oil if I claimed that my recordings contain them when in fact they don’t.

The snake oil is in the idea that it makes a difference to the listener. The ear definitely cannot respond to it, the eye starts at 430 terahertz, and skin cannot detect vibration above 500 Hz. That leaves the paranormal channels as the only option.

And like Roderick says, infrasonics. Kick drums have energy there, I think, and modern synthesized and sampled music is not limited in how low a frequency it can produce. So, if we want to be sure not to miss anything, we need to pick it up with mics, store it, and play it back with transducers.

The critical difference is that for infrasonics, we have a sensory input channel that can respond to it: our skin. No need for para-science. It would actually make a difference to the listener.

The ear may not be able to process ultrasonics, but that’s not the question. The question is, “can ultrasonics be perceived?”, which is something else. And I’m with you on musical material in the infrasonic range…if there are tunes that dip below 20 Hz…why not?

Through which channel are we asking if ultrasonics can be perceived? There are only five. If the ear cannot send a single nerve impulse in response to it (proven), and skin ditto, we are running out of options.

Grant…you can insist on your point of view, but until someone does a rigorous study to answer the question, I’m going to keep an open mind.

If you google “subharmonics” you will find many articles.

Lin, thanks…I did some reading this morning on the topic. I was not aware of “subharmonics” or “undertones”. It is apparent that they are difficult to generate and are not considered part of the normal generation of musical tones…but I’ll continue my reading and may author a post about them.

I must admit that the amount of emphasis placed on ultrasonics is curious, since we (humans) cannot hear sounds above 20 kHz.

For example: on this site, for a recording to be considered real HD audio, there must be content on the recording above 20 kHz. Another example: DSD is not a high-definition medium since it cannot capture sound above 20 kHz.

I agree with Grant–we should look at this from the listener’s viewpoint.

In my opinion, with limited resources available, we should focus our engineering efforts on improving our ability to reproduce the auditory range, rather than the range of sounds that humans can’t hear.

The key word is “hear”. It may well be that humans perceive the ultrasonics, or the effect of ultrasonics on the “audio band”. Having talked to other engineers about this, AND being aware of analog signal processors that have controls at 40 kHz…I’m not ready to ignore a potential benefit of high-resolution recording. It costs nothing more to capture and release recordings that include ALL of the sound produced by acoustic instruments…so why not?

Your comment about human hearing limits (i.e. 20 kHz is only a guide) is IMHO more wishful thinking than proper knowledge. Please don’t start ‘smearing the truth by suggestion’, I hate that and it is really not your style. The upper range of human hearing does range considerably below 20 kHz, but it doesn’t range considerably above 20 kHz. You can safely treat 20 kHz as a rigid upper limit for all audio purposes. The comments you made about 20 kHz could fairly be made about 16 kHz, but not 20 kHz.

The issue with Boyk is not sections 1-9 (of course musical instruments can produce some energy above 20 kHz), but section 10, where he buys into Oohashi’s ‘hypersonic effect’ and thinks bone conduction is relevant. Bone conduction has a massive threshold issue, and Oohashi’s work has been accepted as an anomaly (probably experimental error) — except by audiophiles. Boyk’s paper strikes me as a classic example of persuasion by crooked thinking, where you say numerous true and demonstrable things in an impressively rigorous presentation, then slip in something much more dubious at the end, while still seeming to be logical and supported with evidence. In Boyk’s case, perhaps innocently, but definitely with a desire to find a result he already believes in. Don’t fall for it. Please.

I can expand via email if you wish.

The focus of today’s post was to point out that there is music above 20 kHz, not to endorse Oohashi’s study or to comment on Boyk’s summary of next steps. You can accept that 20 kHz is a “rigid upper limit”, but I don’t believe it has been established, at least not to my satisfaction. And from a purely intellectual point of view, I see no reason to ignore that area of the acoustic spectrum when we have the tools and formats to deliver it. I’m not aware of any convincing information that says music will have greater fidelity if I roll off everything above 20 kHz. On the contrary, I’ve been working on the new iTrax and going back over positive comments from customers over the years…they’ve been over the moon with enthusiastic praise for our releases. I can’t help but think that leaving in the ultrasonic information might…just might…have something to do with it.

I’m not an audio engineer, but if a 10 kHz fundamental can generate harmonics at 20 kHz, 30 kHz, etc., then won’t a fundamental at, say, 30 kHz generate subharmonics at 15 kHz, 10 kHz, etc.? These subharmonics are audible and would affect the overall sound. If our amplifiers and speakers roll off above 20 kHz, by design or with filters, then won’t the overall sound be affected? The current philosophy is that ultrasound is distortion, but maybe that is not the case? Those who have subwoofers that go really low and those with super tweeters that go really high rave about the improved sound. Sound quality is subjective, after all, and is not determined by a 20 Hz to 20 kHz ±3 dB response. All this calls for more research, but since it is subjective, it may require a lot of A/B listening tests.

You’re right that under certain circumstances, musicians and specialized equipment can generate “subharmonics”, but they are not the norm and are not routinely generated when listening to recorded music.
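The arithmetic behind the subharmonic question is easy to lay out, and it shows where the asymmetry lies. In the sketch below (the helper names are hypothetical), harmonics are integer multiples n·f and subharmonics are integer fractions f/n. The two lists look symmetric, but the physics is not: vibrating strings and air columns naturally produce (approximately) the multiples, whereas a linear system driven by a 30 kHz sine produces no output at 15 kHz or 10 kHz at all. As the reply above says, subharmonics need a special generating mechanism.

```python
def harmonics(fundamental_hz, limit_hz):
    """Integer multiples n * f of a fundamental, up to and including limit_hz."""
    return [n * fundamental_hz for n in range(1, int(limit_hz // fundamental_hz) + 1)]

def subharmonics(fundamental_hz, count):
    """The first `count` integer fractions f / n (n = 2, 3, ...).

    These are present only if some mechanism actually generates them;
    the arithmetic alone does not put them in the signal.
    """
    return [fundamental_hz / n for n in range(2, count + 2)]

print(harmonics(10_000, 40_000))  # [10000, 20000, 30000, 40000]
print(subharmonics(30_000, 3))    # [15000.0, 10000.0, 7500.0]

# How many harmonics of a 1 kHz tone would lie between 20 kHz and 80 kHz
# (80 kHz being the muted-trumpet figure from Boyk's abstract)?
ultrasonic = [h for h in harmonics(1_000, 80_000) if h > 20_000]
print(len(ultrasonic))  # 60 (21 kHz through 80 kHz)
```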

I’m also the type of person that, if the technology is available, I would rather capture the largest spectrum of the musical frequency space that these technologies would allow. Of course, this capturing shouldn’t be done at the expense of the audible range, and I think the quality of many of Mark’s recordings attest to the fact that the fidelity can be easily maintained throughout the range.

Strangely enough, I must admit that I am on the other side of the coin regarding the contribution of these frequencies to the auditory experience. I have to agree with Grant on this one; I’ve had a decent amount of education related to neuroscience and sensory perception. And while I am by no means the world’s expert in this particular field, a reasonable number of scientific studies over the past 60 years have shown that the membrane in the human cochlea will simply not deflect in response to a stimulus above 20 kHz (at least to a pure sine wave). If there is no movement of this membrane, then it does not activate the respective auditory nerve cells and downstream nerve fibers, and therefore there is no perceptible sound. Since each section of this membrane is tuned to a specific frequency, it really shouldn’t matter whether frequencies are presented individually or in concert. However, I’m not familiar enough with this area to know whether more complex frequency patterns, or the use of harmonics, could have an alternate effect on the cochlear response. I’m sure there has been a study on this, given the efforts in improving cochlear implant devices.

So overall, based on the data available, I can be very accepting of 20 kHz as a strict upper limit for the overwhelming majority of humans. However, it is certainly true that musical savants are born with exceptional pitch and timbre distinction, and in some cases advantages in the frequency space, though so far I believe this has been related to acute frequency discrimination.

Anyway, just my Jekyll and Hyde thoughts on this…thanks for bringing this up, it’s quite an interesting topic.

Perhaps the cited instrumental ultrasonics, by themselves, are not audible in the conventional sense. But…

and I’m speculating here…perhaps when they are part of, or combined with, the conventional audible signature of the music, they complete the sonic signature of the instrument. I know that many audiophile magazines have often stated how hard it is to get cymbals and vocal sibilance “right.” Perhaps ultrasonics are part of the answer?

I think you’re right to remain open minded on the whole question of ultrasonics…there might be something going on there. But I do accept that it’s most likely not through normal hearing.

So I had a crazy thought. We all agree that there is some limit to our hearing. Let’s pick 23 kHz for now. Do you suppose that the presence of a 45 kHz signal, along with the information that we can hear, influences (distorts) what we can hear? Any thoughts on how that could be objectively tested?

This is the Rupert Neve experiment that I mentioned in a post some time ago. I’m still moving forward with the idea and will post some new tones soon.

Can you point to the post? I’d like to read it. I have some ideas how you might at least prove plausibility.

Here’s the link to the post about the Neve investigation: http://www.realhd-audio.com/?p=2035

Thanks, Mark. I replied on that post tonight.
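For the 45 kHz question, one objective starting point is a null test: capture a playback chain’s output with and without the ultrasonic component, subtract the two, and check whether the difference has any energy in the audible band. The sketch below runs that test on a simulated chain. Everything in it is an assumption for illustration: the memoryless polynomial model y = x + k2·x² + k3·x³ stands in for a real amplifier and speaker, 1 kHz and 45 kHz are arbitrary test tones, and 23 kHz is the working hearing limit proposed in the comment. A nonzero result would only show that the chain lets the ultrasonic tone alter audible content; whether a listener perceives that change is a separate question for listening tests.

```python
import cmath
import math

RATE = 204_800  # assumed sample rate, high enough to represent a 45 kHz tone
N = 1024        # 200 Hz bins; 1 kHz and 45 kHz fall exactly on bins

def tone(freq_hz, amp):
    return [amp * math.sin(2 * math.pi * freq_hz * t / RATE) for t in range(N)]

def playback_chain(x, k2, k3):
    """Hypothetical memoryless chain y = x + k2*x^2 + k3*x^3 (k2 = k3 = 0 is linear)."""
    return [s + k2 * s * s + k3 * s ** 3 for s in x]

def audible_band_energy(x, lo_hz=20.0, hi_hz=23_000.0):
    """Sum of |DFT bin|^2 over bins between lo_hz and hi_hz (23 kHz per the comment)."""
    energy = 0.0
    for k in range(1, N // 2 + 1):
        if lo_hz <= k * RATE / N <= hi_hz:
            coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / N) for t in range(N))
            energy += abs(coeff) ** 2
    return energy

def null_test(k2, k3):
    """Audible-band energy of (output with the 45 kHz tone) minus (output without it)."""
    audible = tone(1_000, 1.0)
    ultra = tone(45_000, 0.5)
    with_ultra = playback_chain([a + u for a, u in zip(audible, ultra)], k2, k3)
    without = playback_chain(audible, k2, k3)
    residual = [w - wo for w, wo in zip(with_ultra, without)]
    return audible_band_energy(residual)

print(null_test(0.0, 0.0))   # essentially zero: a linear chain leaves the audible band alone
print(null_test(0.1, 0.05))  # clearly nonzero: the cubic term changes the 1 kHz level
```

With k3 = 0, the residual stays out of the audible band for this particular tone pair, because the second-order products land at 44 kHz and 46 kHz (plus DC and 90 kHz). That is one reason a real test would need several different audible test signals, not just one tone.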

I’ve been thinking about your position that if a live performance produces frequencies outside the accepted range of human hearing (20 Hz to 20 kHz), they should still be presented to the listener of recorded music. Have you ever considered whether the superheterodyne principle might allow frequencies outside our normally detectable range to influence our perception?

Some Wikipedia links:

http://en.wikipedia.org/wiki/Superheterodyne_receiver

http://en.wikipedia.org/wiki/Intermediate_frequency

http://en.wikipedia.org/wiki/Beat_frequency

If 30 kHz and 31 kHz sine waves were sent to separate speakers capable of reproducing those frequencies, would a 1 kHz beat frequency be detectable? How about a 70 kHz and 80 kHz combination producing a 10 kHz beat frequency? If that worked, then perhaps even infrasound might be detectable by the ears as well! The speakers would probably have to produce these frequencies at high volume for the beat to be detectable, though.
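The beat-frequency idea can be checked numerically. Adding 30 kHz and 31 kHz sine waves produces a 1 kHz beat in the envelope, but the spectrum of the plain sum contains nothing at 1 kHz. An actual 1 kHz component appears only when something nonlinear acts on the combined signal, which is what the mixer in a superheterodyne receiver does. The sketch below assumes a hypothetical weak square-law nonlinearity y = x + k2·x², standing in for whatever might do that mixing (a transducer, or possibly the air itself at very high levels); the sample rate, block length and k2 value are also assumptions.

```python
import cmath
import math

RATE = 204_800  # assumed sample rate; Nyquist (102.4 kHz) is above every tone used
N = 2048        # 100 Hz bins, so 1 kHz and 10 kHz land exactly on a bin

def two_tones(f1_hz, f2_hz):
    """Linear sum of two equal-amplitude sine waves."""
    return [math.sin(2 * math.pi * f1_hz * t / RATE)
            + math.sin(2 * math.pi * f2_hz * t / RATE) for t in range(N)]

def amplitude_at(x, freq_hz):
    """Amplitude of the component at freq_hz, from a single DFT bin
    (scaled so that a sinusoid of amplitude A reads as A)."""
    k = round(freq_hz * N / RATE)
    coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / N) for t in range(N))
    return 2 * abs(coeff) / N

def square_law(x, k2=0.1):
    """Hypothetical weak second-order nonlinearity: y = x + k2 * x**2."""
    return [s + k2 * s * s for s in x]

def difference_tone(f1_hz, f2_hz, k2=0.1):
    """Amplitude at f2 - f1 for the plain sum and after the nonlinearity."""
    mixed = two_tones(f1_hz, f2_hz)
    diff = f2_hz - f1_hz
    return amplitude_at(mixed, diff), amplitude_at(square_law(mixed, k2), diff)

for f1, f2 in [(30_000, 31_000), (70_000, 80_000)]:
    linear, nonlinear = difference_tone(f1, f2)
    print(f"{f2 - f1} Hz: linear sum {linear:.6f}, after square law {nonlinear:.6f}")
# In this model the linear sum has essentially nothing at the difference frequency,
# while the square law produces a difference tone of amplitude k2.
```

In this idealized digital model, some sum-frequency products of the 70/80 kHz pair lie above 102.4 kHz and alias back below it; none of them lands on the difference-frequency bins checked here. The same mixing math would apply to infrasonic difference tones, but whether any real nonlinearity in the listening room is strong enough to matter is exactly the open question.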